Introduction

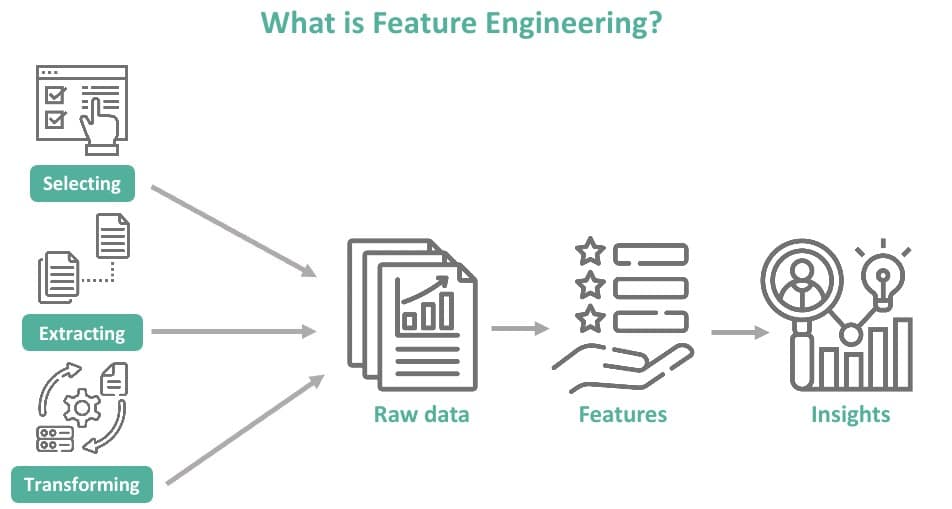

Welcome to our in-depth exploration of feature engineering, a crucial aspect of building effective predictive models in data science. This post looks at how the right techniques can significantly improve the performance of machine learning models. We’ll explore methods for transforming and manipulating data so that it better suits the needs of the algorithms that consume it.

- Focus on Feature Engineering: The key to unlocking predictive modeling potential.

- Enhancing Model Accuracy: How feature engineering improves performance.

Understanding the Basics of Feature Engineering

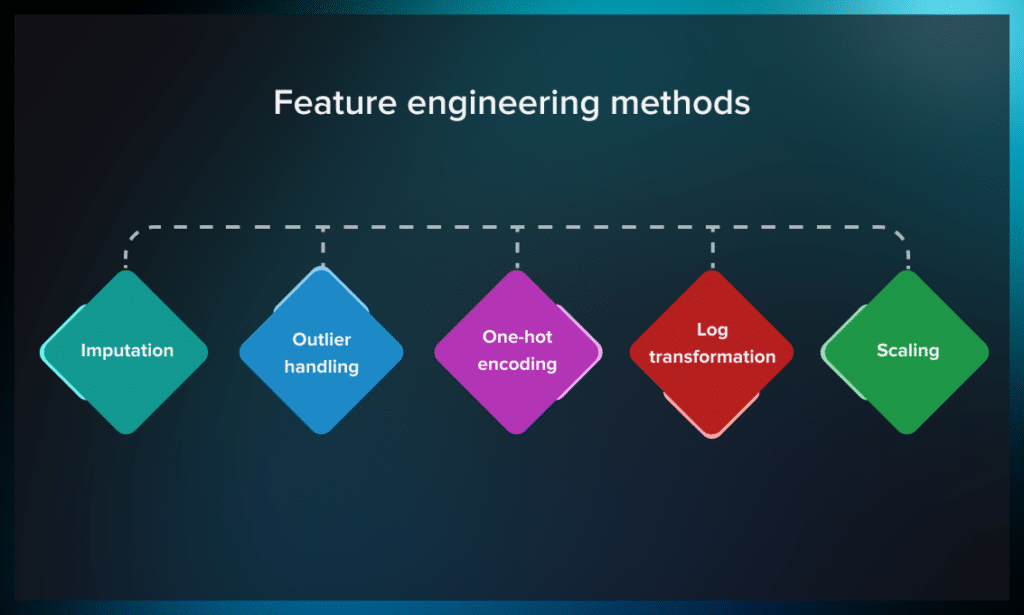

Feature engineering involves creating new features from existing data and selecting the most relevant features for use in predictive models. It’s a critical step that can determine the success or failure of a model. The process includes techniques like binning, encoding, and extracting new variables. For instance, converting a continuous age variable into age groups (bins) can sometimes improve a model’s performance by letting it capture non-linear effects, at the cost of discarding the variation within each bin.
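The age-binning idea can be sketched with pandas. This is a minimal illustration rather than a recommended scheme: the sample ages, the bin edges, and the group labels are all assumptions made up for the example.

```python
import pandas as pd

# Hypothetical ages; the bin edges and labels below are illustrative assumptions.
ages = pd.Series([5, 17, 18, 34, 35, 64, 65, 90], name="age")

# right=False makes each bin include its left edge:
# [0, 18), [18, 35), [35, 65), [65, 120)
age_group = pd.cut(
    ages,
    bins=[0, 18, 35, 65, 120],
    labels=["child", "young_adult", "adult", "senior"],
    right=False,
)

print(age_group.tolist())
```

In practice the bin edges usually come from domain knowledge or from the data’s distribution, so it is worth checking whether the binned feature actually helps on a validation set.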

1. Domain Knowledge Integration

Effective feature engineering often requires domain-specific knowledge. By understanding the context of the data, data scientists can create more meaningful features that capture the nuances of the specific field, whether it be finance, healthcare, or retail. For example, in healthcare, creating features from raw medical data might require insights into medical conditions and treatments.

2. Feature Engineering Tools

Various tools and libraries facilitate feature engineering, such as scikit-learn in Python. They provide functions for feature selection, extraction, and transformation, making the process more efficient and less error-prone.

- Feature Creation: Techniques for developing new features.

- Feature Selection: Identifying the most impactful variables.

Handling Missing Data

Dealing with missing data is a common challenge in feature engineering. Two strategies cover most cases: imputation (filling missing values with statistical measures such as the mean, median, or mode) and using algorithms that natively support missing values. For example, replacing missing numeric values with the median, or missing categorical values with the mode, often yields better model performance than discarding incomplete rows, because the remaining information in each row is kept.
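As a minimal sketch of median imputation, the snippet below uses scikit-learn’s `SimpleImputer`. The small array (think of the columns as age and income) is invented for illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Hypothetical data: column 0 could be age, column 1 income. NaN marks a missing value.
X = np.array([
    [25.0, 50000.0],
    [np.nan, 62000.0],
    [40.0, np.nan],
    [31.0, 58000.0],
])

# Replace each missing value with the median of its column.
imputer = SimpleImputer(strategy="median")
X_filled = imputer.fit_transform(X)

print(X_filled)
```

For categorical columns, `strategy="most_frequent"` fills with the mode instead. Fitting the imputer on the training split only, then applying it to the test split, keeps test-set information from leaking into the fill values.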

- Imputation Techniques: Methods for addressing missing data.

- Impact on Model Accuracy: How missing data treatment affects outcomes.

Advanced Imputation Techniques: Beyond basic statistical measures, advanced imputation techniques like K-Nearest Neighbors (KNN) or Multiple Imputation by Chained Equations (MICE) can be used. These methods consider the underlying relationships between features to fill in missing values more accurately.
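A short sketch of KNN-based imputation with scikit-learn’s `KNNImputer` follows. The toy data, in which the second column is simply twice the first, and the choice of `n_neighbors=2` are assumptions for illustration. MICE is not shown here.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Hypothetical data where column 1 is twice column 0; one value is missing.
X = np.array([
    [1.0, 2.0],
    [2.0, 4.0],
    [3.0, np.nan],
    [4.0, 8.0],
    [9.0, 18.0],
])

# Fill the gap with the average of the 2 nearest rows,
# where "nearest" is measured on the features that are present.
imputer = KNNImputer(n_neighbors=2)
X_filled = imputer.fit_transform(X)

print(X_filled[2])
```

Here the two nearest rows are those with 2.0 and 4.0 in the first column, so the imputed value is the mean of 4.0 and 8.0. A column-wide median would have ignored that relationship.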

Impact of Missing Data on Model Robustness: Properly handling missing data is crucial for the robustness of predictive models. Inaccurate imputation can lead to biased or misleading results, affecting the reliability of the model’s predictions.

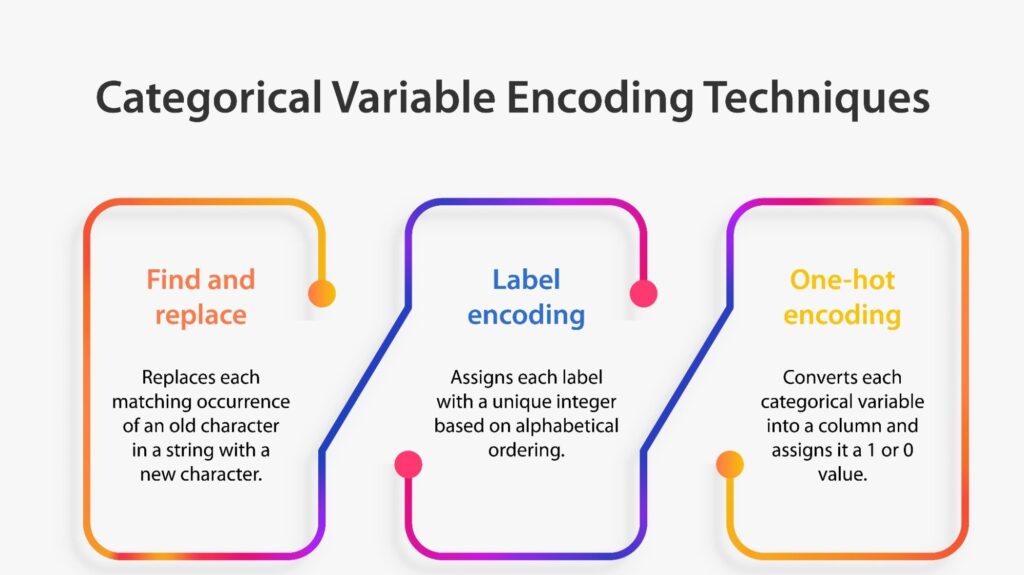

Categorical Data Encoding

Categorical data encoding is vital for converting non-numeric data into a format that machine learning algorithms can understand. Techniques like one-hot encoding or label encoding transform categorical variables into numeric formats, thus making them usable in predictive modeling. For example, encoding a ‘color’ feature with values like ‘red’, ‘blue’, ‘green’ into numerical values is essential for model processing.

- Encoding Methods: Different approaches for categorical data.

- Practical Application: Real-world examples of categorical encoding.

Choosing the Right Encoding Technique

The choice of encoding technique can significantly impact model performance. For instance, one-hot encoding is suitable for nominal data without an inherent order, while ordinal encoding is better for ordinal data where the order matters.
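The difference between the two encoders can be sketched with scikit-learn. The ‘color’ values come from the example above. The ‘size’ feature and its small < medium < large ordering are hypothetical, added only to show ordinal encoding.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# Nominal feature: no inherent order, so each category gets its own 0/1 column.
colors = np.array([["red"], ["blue"], ["green"], ["blue"]])
onehot = OneHotEncoder()
color_matrix = onehot.fit_transform(colors).toarray()
print(onehot.categories_[0])  # column order follows the sorted categories
print(color_matrix)

# Ordinal feature (hypothetical): the order is passed explicitly so that
# small < medium < large maps to 0 < 1 < 2.
sizes = np.array([["small"], ["large"], ["medium"], ["small"]])
ordinal = OrdinalEncoder(categories=[["small", "medium", "large"]])
size_codes = ordinal.fit_transform(sizes)
print(size_codes.ravel())
```

Passing `categories` explicitly matters: without it, `OrdinalEncoder` orders categories alphabetically, which would put ‘large’ before ‘medium’.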

Avoiding the Dummy Variable Trap

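When using one-hot encoding, it’s important to avoid the dummy variable trap. Because the encoded columns for a feature always sum to one, any one of them can be predicted exactly from the others, which creates perfect multicollinearity in models with an intercept, such as linear regression. This can be prevented by dropping one of the encoded columns, as it is redundant.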
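Dropping the redundant column can be sketched with pandas’ `get_dummies` and its `drop_first` option. The tiny DataFrame is invented for illustration.

```python
import pandas as pd

# Hypothetical data reusing the 'color' example.
df = pd.DataFrame({"color": ["red", "blue", "green", "blue"]})

# Full one-hot encoding: the three columns always sum to 1 in every row.
full = pd.get_dummies(df["color"], prefix="color")

# drop_first=True removes the first category's column ('blue' here),
# which becomes the implicit baseline.
reduced = pd.get_dummies(df["color"], prefix="color", drop_first=True)

print(list(full.columns))
print(list(reduced.columns))
```

A row with zeros in both remaining columns now means ‘blue’, so no information is lost.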

Feature Scaling and Normalization

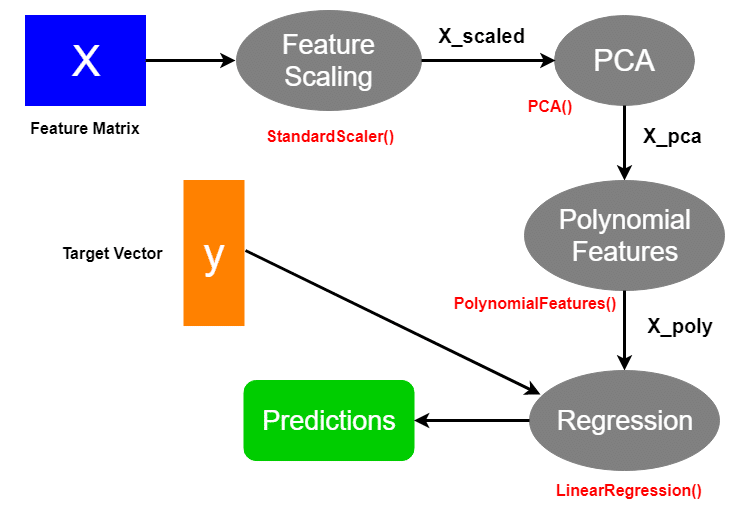

Feature scaling and normalization are crucial when features have different scales. Techniques like Min-Max scaling or Z-score normalization put all features on a comparable scale, so that models sensitive to magnitude, such as distance-based or gradient-based methods, are not dominated by whichever feature happens to have the largest values. For instance, scaling features to a range of 0 to 1 can prevent models from being skewed by features with larger scales.

- Normalization vs. Standardization: While normalization rescales features to a range, standardization rescales data to have a mean of zero and a standard deviation of one. The choice between these techniques depends on the model used and the data distribution.

- Robust Scaling for Outliers: In datasets with significant outliers, robust scaling methods, which use median and interquartile ranges, can be more effective than standard min-max scaling or Z-score normalization.
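The three scaling approaches above can be compared side by side with scikit-learn. This is a minimal sketch on a made-up single feature containing one large outlier.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, RobustScaler, StandardScaler

# Hypothetical feature with one large outlier (100.0).
X = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

# Min-Max: rescales to [0, 1]; the outlier squeezes the other values near 0.
minmax = MinMaxScaler().fit_transform(X)

# Z-score: subtracts the mean and divides by the standard deviation.
standard = StandardScaler().fit_transform(X)

# Robust: subtracts the median and divides by the interquartile range,
# so the outlier barely influences how the typical values are scaled.
robust = RobustScaler().fit_transform(X)

print(minmax.ravel())
print(standard.ravel())
print(robust.ravel())
```

With robust scaling the four typical values stay evenly spread around zero, while Min-Max scaling compresses them into a narrow band near zero.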

Feature Interaction and Polynomial Features

Creating interaction features (features that are combinations of two or more variables) can uncover complex relationships in the data. Polynomial features, which are features created by raising existing features to a power, can also add complexity and improve model performance. For example, creating a feature that multiplies two existing features can reveal interactions that simple linear models might miss.

- Detecting Interaction Effects: Interaction features can reveal synergistic effects that are not apparent when considering features independently. For instance, in real estate pricing models, the interaction between location and property size might have a significant impact on price.

- Computational Considerations: While polynomial features can add valuable complexity to models, they also increase the computational load. It’s important to balance the benefits of additional features with the increased computational cost and the risk of overfitting.
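Interaction and polynomial features can be generated with scikit-learn’s `PolynomialFeatures`. The sketch below uses a made-up two-feature array; the names x1 and x2 in the comments are just labels for the two columns.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# Hypothetical data with two features, x1 and x2.
X = np.array([
    [2.0, 3.0],
    [4.0, 5.0],
])

# Degree-2 expansion without the constant column:
# output columns are x1, x2, x1^2, x1*x2, x2^2
poly = PolynomialFeatures(degree=2, include_bias=False)
X_poly = poly.fit_transform(X)

# interaction_only=True keeps products of distinct features and drops the squares:
# output columns are x1, x2, x1*x2
inter = PolynomialFeatures(degree=2, interaction_only=True, include_bias=False)
X_inter = inter.fit_transform(X)

print(X_poly)
print(X_inter)
```

Two input features become five at degree 2, and the count grows quickly with more features or higher degrees, which is where the computational cost and overfitting risk mentioned above come from.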

Conclusion

Feature engineering is an art that requires creativity, intuition, and a deep understanding of the data. By mastering these five essential steps, data scientists and analysts can significantly enhance the performance of their predictive models. As machine learning continues to evolve, feature engineering remains a fundamental skill for anyone looking to extract the most value from their data.

- The Future of Feature Engineering: Emerging trends and technologies.

- Empowering Predictive Models: The ongoing importance of feature engineering.

In the ever-evolving landscape of data science, feature engineering remains a cornerstone of effective predictive modeling. As machine learning algorithms become more sophisticated, the role of feature engineering in enhancing model performance becomes even more critical. The future of feature engineering lies in automating these processes while retaining the creativity and domain expertise that human data scientists bring, ensuring that models are not only technically sound but also contextually relevant.
