Introduction

Welcome to our guide to data preprocessing, a crucial step in any data analysis workflow. In this blog, we explain why cleaning and preparing your data matters and how it gets your data ready for insightful analysis. We’ll walk through five key techniques that every data scientist or analyst should know: data cleaning, data transformation, data reduction, feature selection, and data integration.

- Focus on Data Preprocessing Techniques: The foundation of effective data analysis.

- Data Cleaning and Preparing Data: Essential steps for accurate results.

Data Cleaning: Removing Inaccuracies and Irregularities

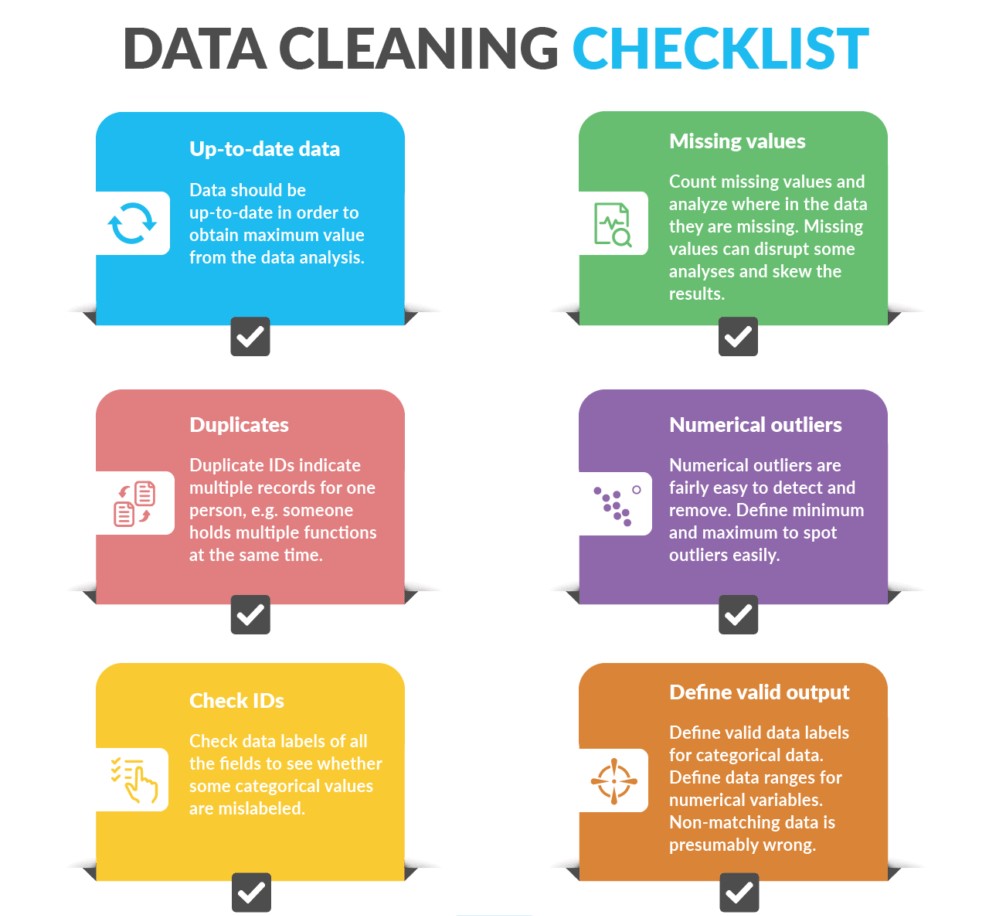

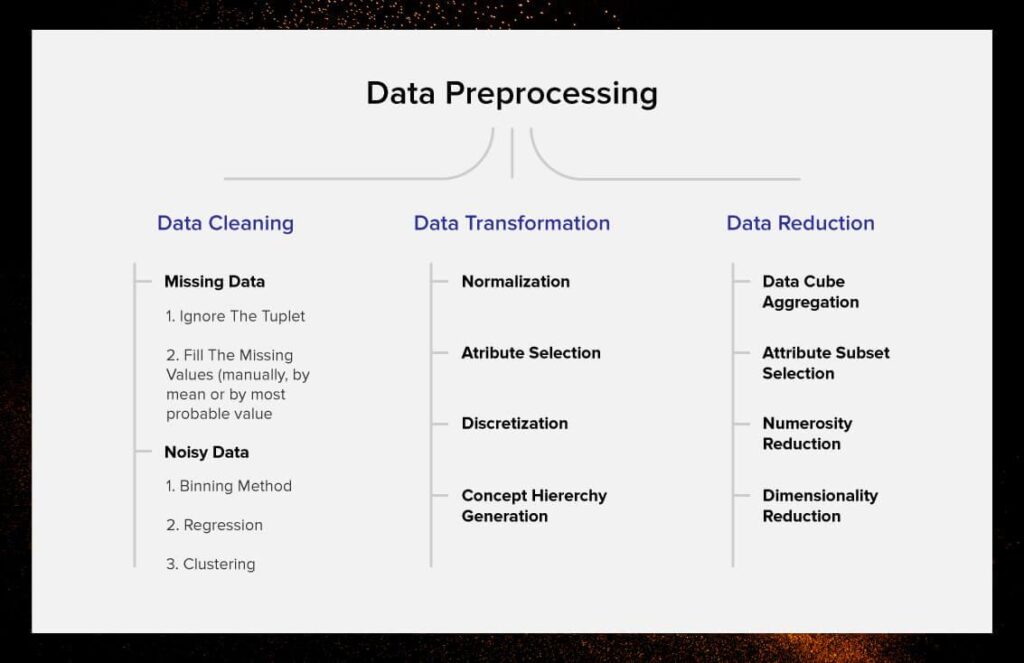

Data cleaning involves identifying and correcting errors and inconsistencies in your data to improve its quality and accuracy. Effective data cleaning not only rectifies inaccuracies but also ensures consistency across datasets.

This process can involve dealing with anomalies, duplicate entries, and irrelevant data, all of which can skew analysis results. In sectors like healthcare or finance, where data accuracy is paramount, meticulous data cleaning can be the difference between an accurate diagnosis or financial forecast and a costly error.

- Techniques for Data Cleaning: Identifying outliers, handling missing values, and correcting errors.

- Real-world Example: Data cleaning in customer databases for accurate analysis.
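The three techniques listed above can be sketched on a tiny customer table. This is a minimal illustration, not a definitive implementation: the field names (`customer_id`, `amount`), the sample values, the choice of median imputation, and the 1.5 × IQR outlier rule are all assumptions made for the example. It uses only the Python standard library; in practice a library such as pandas would usually do this work.

```python
from statistics import median, quantiles

def clean_records(records):
    """Drop duplicate customers, fill missing amounts, and flag outliers."""
    # 1. Duplicates: keep the first record seen for each customer_id.
    seen, unique = set(), []
    for rec in records:
        if rec["customer_id"] not in seen:
            seen.add(rec["customer_id"])
            unique.append(dict(rec))  # copy so the input is not mutated

    # 2. Missing values: impute with the median of the observed amounts.
    observed = [r["amount"] for r in unique if r["amount"] is not None]
    fill_value = median(observed)
    for r in unique:
        if r["amount"] is None:
            r["amount"] = fill_value

    # 3. Outliers: flag values outside 1.5 * IQR of the observed amounts.
    q1, _, q3 = quantiles(observed, n=4, method="inclusive")
    low, high = q1 - 1.5 * (q3 - q1), q3 + 1.5 * (q3 - q1)
    for r in unique:
        r["is_outlier"] = not (low <= r["amount"] <= high)
    return unique

# Hypothetical sample data for illustration only.
customers = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 2, "amount": 95.0},
    {"customer_id": 2, "amount": 95.0},    # duplicate entry
    {"customer_id": 3, "amount": None},    # missing value
    {"customer_id": 4, "amount": 110.0},
    {"customer_id": 5, "amount": 105.0},
    {"customer_id": 6, "amount": 5000.0},  # suspiciously large value
]
cleaned = clean_records(customers)
```

Flagging outliers rather than deleting them is a deliberate choice here: whether an extreme value is an error or a genuine observation usually needs a human decision.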

Data Transformation: Standardizing and Normalizing Data

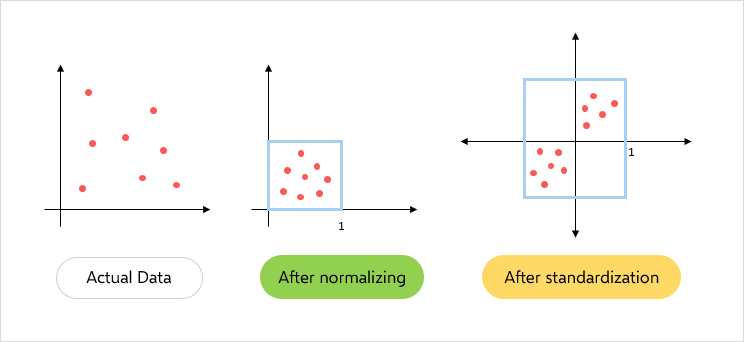

Transforming data into a consistent format is crucial for comparative analysis and for integrating different datasets seamlessly. It matters most when data comes from varied sources, because it puts all values on a comparable scale.

This uniformity matters for many machine learning algorithms, especially distance-based ones, where a feature with a large numeric range can dominate features with smaller ranges and bias the outcome. For instance, in customer segmentation, standardizing income levels and purchase frequencies keeps income (often in the tens of thousands) from overwhelming purchase counts, allowing a more accurate grouping of customer profiles.

- Methods of Transformation: Standardization, normalization, and encoding categorical data.

- Case Study: Transforming sales data from different regions for a unified analysis.
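As a rough sketch of the three methods named above, the functions below standardize a numeric column to zero mean and unit variance, rescale it to the range 0 to 1, and one-hot encode a categorical column. The function names and sample values are hypothetical, and the code assumes each numeric column has at least two distinct values (a constant column would cause a division by zero).

```python
from statistics import mean, pstdev

def standardize(values):
    """Z-score standardization: subtract the mean, divide by the std deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def min_max(values):
    """Min-max normalization: rescale values linearly into [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(labels):
    """One-hot encoding: one 0/1 column per category, in sorted category order."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

# Hypothetical sample data for illustration only.
incomes = [30000, 50000, 70000]
regions = ["north", "south", "north"]

print(standardize(incomes))
print(min_max(incomes))
print(one_hot(regions))
```

Standardization is often preferred when the data contains outliers, since min-max scaling squeezes all other values together when one value is extreme.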

Data Reduction: Simplifying Complex Data

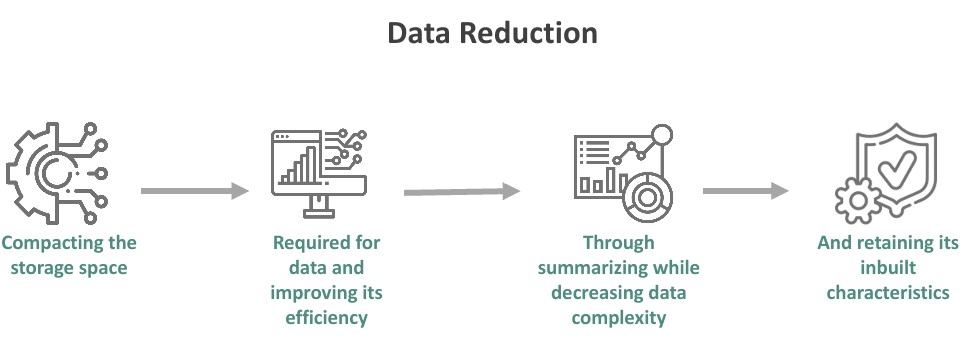

Data reduction techniques simplify complex data, making it more manageable while preserving the information that matters. Besides making the analysis easier, reduction helps you focus on the most significant aspects of the data.

Techniques like principal component analysis (PCA) can reduce the dimensionality of the data while retaining most of its variance. This is particularly useful in fields like genomics, where researchers deal with vast amounts of genetic data but need to focus on specific traits or markers.
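To make the idea concrete, here is a minimal sketch that finds only the first principal component using power iteration on the covariance matrix, then projects each row onto it. This is a teaching sketch under stated assumptions, not how a production library computes PCA: the function names are hypothetical, only one component is extracted, and the all-ones starting vector is assumed not to be orthogonal to the leading direction.

```python
import math

def first_principal_component(rows, iterations=200):
    """Return (unit direction, explained variance ratio, column means)."""
    n, d = len(rows), len(rows[0])
    # Center each column so the covariance is computed around the mean.
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly multiply by cov and renormalize.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Variance along v, as a share of the total variance.
    along = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
    total = sum(cov[i][i] for i in range(d))
    return v, along / total, means

def project(rows, direction, means):
    """Reduce each row to a single score along the given direction."""
    return [sum((r[j] - means[j]) * direction[j] for j in range(len(direction)))
            for r in rows]

# Hypothetical two-feature data that lies close to a straight line.
data = [(2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
direction, ratio, means = first_principal_component(data)
scores = project(data, direction, means)
print(direction, ratio, scores)
```

When the explained variance ratio is close to 1, the single score per row carries nearly all the variation of the original features.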

- Approaches to Data Reduction: Dimensionality reduction, binning, and aggregation.

- Application: Reducing financial data for trend analysis.

Another aspect of data reduction is the aggregation of data, which involves summarizing detailed data into a more digestible format. For example, in sales analysis, daily transaction data can be aggregated into monthly or quarterly sales figures, making it easier to identify trends and patterns over time.
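The daily-to-monthly example can be sketched in a few lines. The function name and the transaction values below are hypothetical; each transaction is assumed to be a `(date, amount)` pair.

```python
from collections import defaultdict
from datetime import date

def monthly_totals(transactions):
    """Sum daily (date, amount) pairs into totals keyed by (year, month)."""
    totals = defaultdict(float)
    for day, amount in transactions:
        totals[(day.year, day.month)] += amount
    # Return a plain dict ordered chronologically.
    return dict(sorted(totals.items()))

# Hypothetical sample data for illustration only.
daily_sales = [
    (date(2024, 1, 5), 100.0),
    (date(2024, 1, 20), 50.0),
    (date(2024, 2, 3), 75.0),
]
print(monthly_totals(daily_sales))
```

The same grouping key could be swapped for a quarter, for example `(day.year, (day.month - 1) // 3 + 1)`, to produce quarterly figures.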

Feature Selection: Identifying Relevant Variables

Feature selection involves identifying the most relevant variables for analysis, which enhances the performance and accuracy of your models.

The right feature selection techniques can significantly improve the efficiency and effectiveness of predictive models. By removing irrelevant or redundant features, models become more streamlined and less prone to overfitting. This is especially important in real-time applications like fraud detection, where the speed and accuracy of the model are critical.

Moreover, feature selection aids in interpretability, making models easier to understand and explain. In fields like medical research, where interpretability is as important as accuracy, feature selection helps in identifying the most influential factors in patient outcomes, thereby aiding in more informed medical decision-making.

- Selecting Key Features: Techniques like filter, wrapper, and embedded methods.

- Example: Feature selection in predictive modeling for marketing campaigns.
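Of the three families mentioned above, filter methods are the simplest to illustrate: score each feature independently against the target and keep the best. The sketch below ranks features by absolute Pearson correlation. The feature names, the sample values, and the choice of correlation as the score are assumptions for illustration; wrapper and embedded methods need a model and are not shown.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def select_top_k(features, target, k):
    """Filter method: keep the k features most correlated with the target."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores

# Hypothetical marketing-campaign data for illustration only.
features = {
    "emails_opened":  [1, 2, 8, 9, 7, 3],
    "past_purchases": [0, 1, 3, 2, 4, 1],
    "signup_weekday": [1, 5, 3, 6, 2, 4],
}
converted = [0, 0, 1, 1, 1, 0]  # 1 = customer responded to the campaign

selected, scores = select_top_k(features, converted, k=2)
print(selected)
```

A filter like this is fast but scores each feature in isolation, so it can miss features that are only useful in combination and can keep redundant ones.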

Data Integration: Combining Data from Multiple Sources

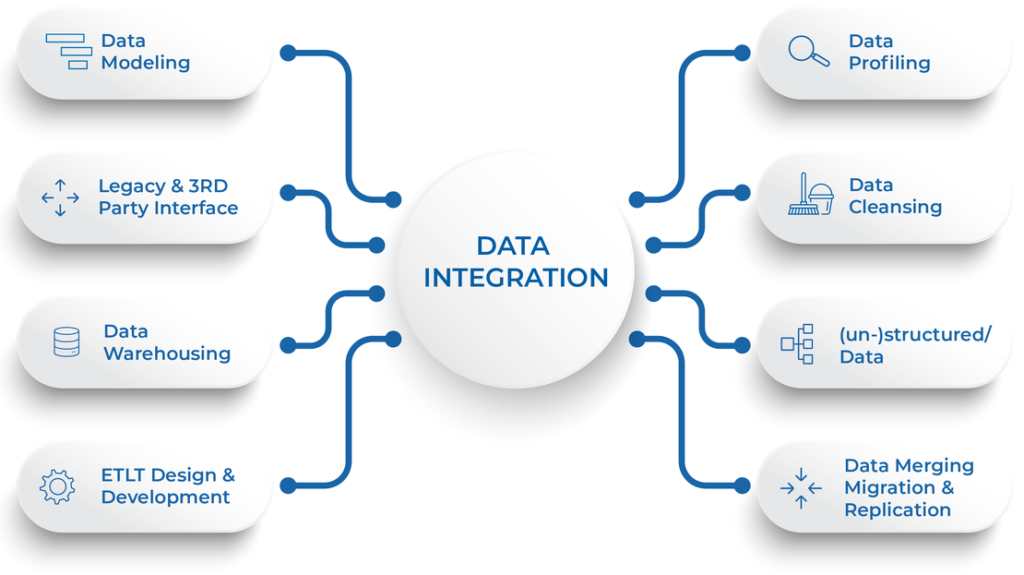

Integrating data from various sources is essential for a comprehensive analysis, because no single source gives a complete view of the subject you are studying.

Data integration involves challenges like dealing with different data formats and ensuring data quality across sources. However, when done correctly, it provides a more comprehensive dataset that can lead to more insightful analyses. For instance, in market research, integrating customer feedback from social media with sales data can provide a more complete picture of customer satisfaction and product performance.

- Integration Techniques: Merging, concatenation, and database integration.

- Real-world Use: Integrating customer data from different platforms for a holistic view.
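Merging is the technique most easily shown in a few lines. The sketch below combines customer records from two hypothetical platforms, a CRM and an online shop, that name and format the shared key differently. The field names (`email`, `Email`, `name`, `total_spent`) and the rule of matching on a lowercased, trimmed email address are assumptions for the example.

```python
def normalize_email(email):
    """Make keys comparable across sources: trim whitespace, lowercase."""
    return email.strip().lower()

def merge_customers(crm_rows, shop_rows):
    """Outer-join two customer lists on the normalized email address."""
    merged = {}
    for row in crm_rows:
        key = normalize_email(row["email"])
        merged[key] = {"email": key, "name": row["name"], "total_spent": None}
    for row in shop_rows:
        key = normalize_email(row["Email"])  # the shop uses a different field name
        record = merged.setdefault(
            key, {"email": key, "name": None, "total_spent": None}
        )
        record["total_spent"] = row["total_spent"]
    return sorted(merged.values(), key=lambda r: r["email"])

# Hypothetical sample data for illustration only.
crm = [
    {"email": "Ana@Example.com ", "name": "Ana"},
    {"email": "bo@example.com", "name": "Bo"},
]
shop = [
    {"Email": "ana@example.com", "total_spent": 120.0},
    {"Email": "cy@example.com", "total_spent": 40.0},
]
for customer in merge_customers(crm, shop):
    print(customer)
```

Keeping unmatched records with `None` in the missing fields, rather than dropping them, makes gaps between the sources visible so they can be handled during data cleaning.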

Another key aspect of data integration is ensuring the integrity and security of the combined data. This is particularly important in sectors like banking or government, where data sensitivity is high. Proper integration techniques ensure that data remains consistent, accurate, and secure, even when sourced from multiple origins.

Conclusion

Data preprocessing is a critical step in the data analysis process, ensuring that your data is clean, consistent, and ready for analysis. By mastering these five preprocessing techniques, you can enhance the quality of your data analysis, leading to more accurate and insightful results.

- The Importance of Data Preprocessing: A cornerstone of successful data analysis.

- Future of Data Analysis: Evolving techniques and tools in data preprocessing.

As the volume and complexity of data continue to grow, these techniques will only become more important. Looking ahead, advances in automation and AI are likely to make preprocessing more efficient and accessible to a broader range of users.
