Introduction

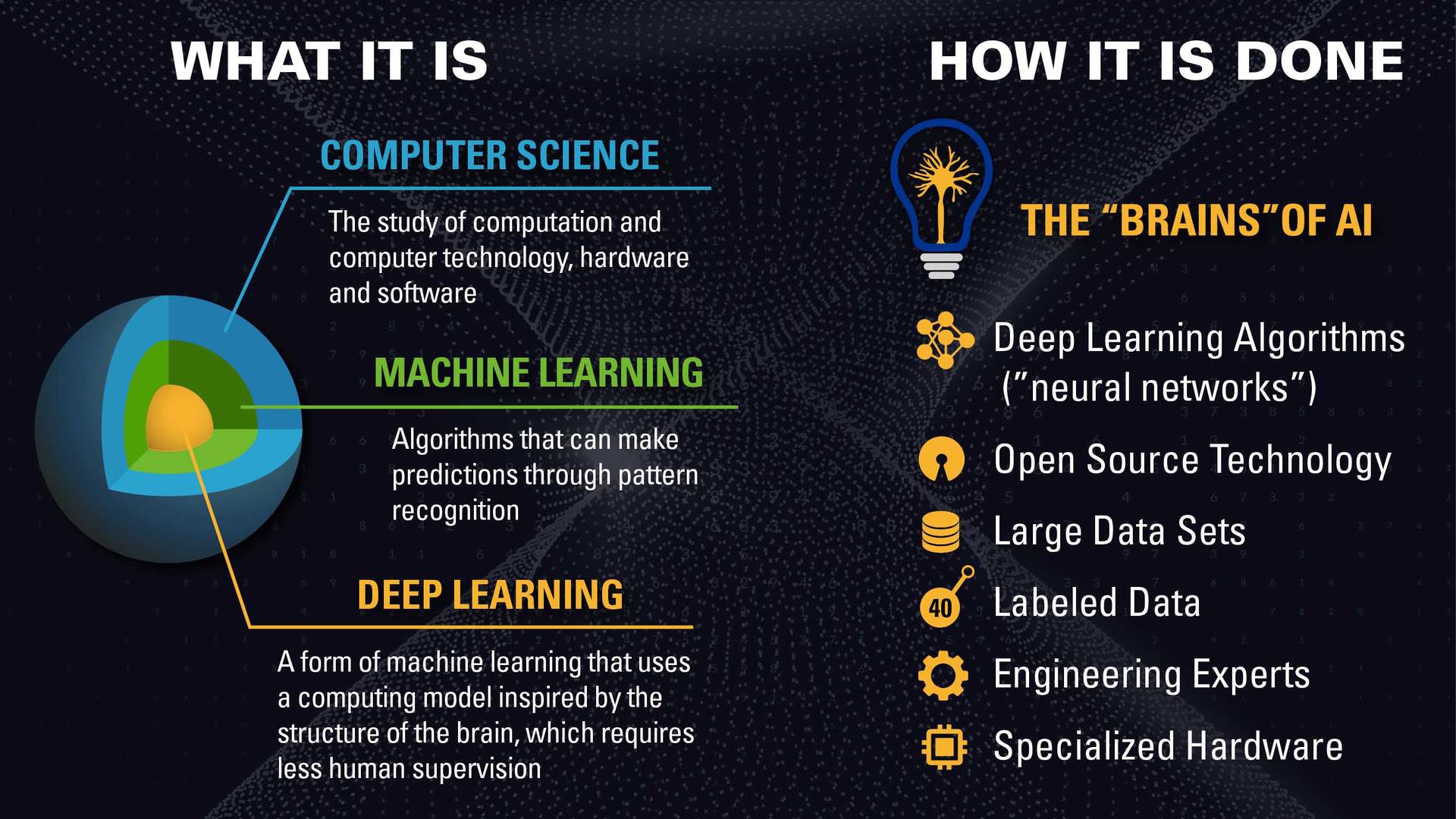

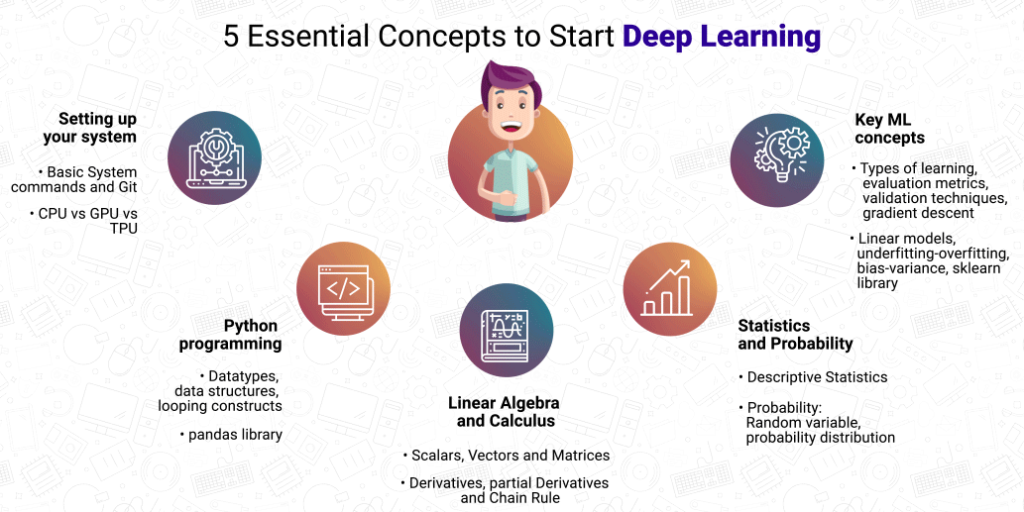

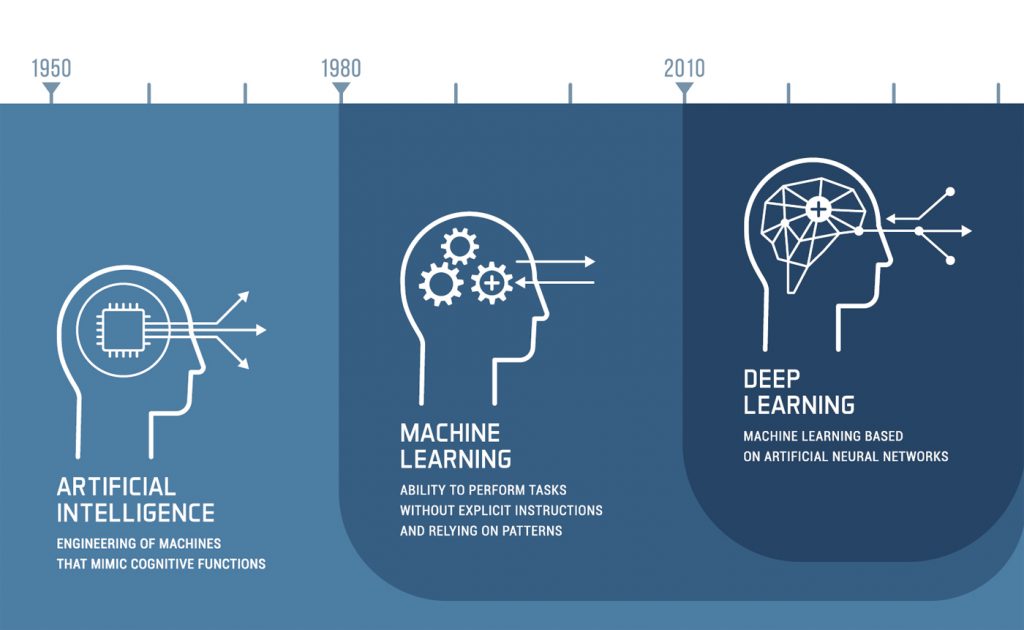

Welcome to the fascinating world of deep learning, a subset of machine learning that’s transforming how we interact with technology. This blog will guide you through the basics of neural networks and their diverse applications. We’ll explore how these algorithms, loosely inspired by the human brain, solve complex problems and make significant impacts across various industries.

Deep learning is not just about programming or data; it’s about understanding and leveraging neural networks to build intelligent systems. As we delve into this topic, we’ll use real-world examples to illustrate how deep learning is applied in everyday life.

Understanding Neural Networks

Neural networks are the building blocks of deep learning. They are inspired by the structure and function of the human brain and consist of interconnected nodes, or ‘neurons’, that process and transmit information. By adjusting the strengths of these connections (the weights) during training, a network learns patterns from data and can then make predictions or decisions on new inputs.

- Components: Layers of neurons, including input, hidden, and output layers.

- Function: Each neuron processes input data, passes it through an activation function, and transmits the output to the next layer.
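The layer-by-layer flow described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a trained model: the layer sizes (3 inputs, 4 hidden neurons, 2 outputs), the random weights, and the sigmoid activation are all assumptions chosen for the example.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers):
    """Pass input vector x through a list of (weights, biases) layers.

    Each layer computes a weighted sum of its inputs plus a bias,
    applies the activation function, and hands the result to the next layer.
    """
    activation = x
    for weights, biases in layers:
        activation = sigmoid(weights @ activation + biases)
    return activation

# Hypothetical network: 3 inputs -> 4 hidden neurons -> 2 outputs,
# with random (untrained) weights for illustration only.
rng = np.random.default_rng(seed=0)
layers = [
    (rng.normal(size=(4, 3)), np.zeros(4)),  # input layer -> hidden layer
    (rng.normal(size=(2, 4)), np.zeros(2)),  # hidden layer -> output layer
]
output = forward(np.array([0.5, -1.0, 2.0]), layers)
print(output.shape)  # (2,)
```

Training, covered in the sections that follow, is what turns these random weights into useful ones.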

Perceptron: The Foundation of Neural Networks

A perceptron is a type of artificial neuron that forms the basic unit of a neural network. It was developed in the 1950s and 1960s by Frank Rosenblatt and is considered one of the simplest types of feedforward neural networks.

Components and Functioning of a Perceptron:

- Input Values (Inputs): A perceptron receives multiple input signals, each representing a different feature of the data being processed.

- Weights: Each input value is assigned a weight that signifies its importance. These weights are adjustable and are tuned during the learning process.

- Summation Function: The perceptron computes a weighted sum of its inputs, which is essentially the dot product of the input values and their corresponding weights. A bias term is usually added to this sum to shift the threshold at which the neuron activates.

- Activation Function: The weighted sum is then passed through an activation function. The most basic form of an activation function in a perceptron is a step function, which outputs a binary result (often 1 or 0) to indicate whether the neuron is ‘activated’ or not.

- Output: The output of the perceptron is used for further processing or as a part of the final output of the neural network.
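Putting these components together, a perceptron’s forward computation fits in a short function. In this sketch the weights and bias are hand-picked (an assumption for illustration) so that the perceptron behaves like a logical AND gate; the bias shifts the threshold of the step function.

```python
import numpy as np

def step(z):
    """Step activation: 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0

def perceptron_output(inputs, weights, bias):
    """Weighted sum of the inputs (a dot product) plus a bias, then a step."""
    weighted_sum = np.dot(inputs, weights) + bias
    return step(weighted_sum)

# Hand-picked weights that make the perceptron act as a logical AND gate.
weights = np.array([1.0, 1.0])
bias = -1.5
for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((a, b), perceptron_output(np.array([a, b]), weights, bias))
```

Only the input (1, 1) pushes the weighted sum (1 + 1 - 1.5 = 0.5) above zero, so only it produces an output of 1.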

Learning Process:

- Training: During training, the perceptron adjusts its weights based on the errors in its predictions. This process is known as the Perceptron Learning Rule, which is a form of supervised learning.

- Error Correction: If the perceptron’s prediction is incorrect, the weights are adjusted in the direction that reduces the error. This adjustment is proportional to the input value and the error magnitude.
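In code, the Perceptron Learning Rule says that each weight changes by learning_rate × error × input, where error is the target minus the prediction. The sketch below trains a perceptron on the four examples of the AND function; the learning rate of 1.0 (which keeps the arithmetic in whole numbers), the 20 epochs, and the zero initial weights are assumptions. Because AND is linearly separable, the rule is guaranteed to reach weights that classify every example correctly after a finite number of updates.

```python
import numpy as np

def train_perceptron(X, y, learning_rate=1.0, epochs=20):
    """Train a perceptron with the Perceptron Learning Rule.

    For each misclassified example, every weight moves by
    learning_rate * error * input, so the change is proportional
    to both the input value and the error.
    """
    weights = np.zeros(X.shape[1])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(X, y):
            prediction = 1 if np.dot(inputs, weights) + bias >= 0 else 0
            error = target - prediction  # -1, 0, or +1
            weights += learning_rate * error * inputs
            bias += learning_rate * error
    return weights, bias

# Learn the logical AND function from its four labeled examples.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
weights, bias = train_perceptron(X, y)
predictions = [1 if np.dot(x, weights) + bias >= 0 else 0 for x in X]
print(predictions)  # [0, 0, 0, 1]
```

When a prediction is correct the error is 0 and nothing changes, which is why the weights stop moving once all four examples are classified correctly.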

Real-world example: Image recognition systems use neural networks built from layers of artificial neurons to identify and classify objects within images, learning from large datasets of labeled images.

Key Concepts in Deep Learning

Deep learning involves several key concepts that are essential for understanding how neural networks function. These include backpropagation, which is used to train networks by adjusting their weights based on prediction errors, and convolutional neural networks (CNNs), which are particularly effective for image and video processing.

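- Backpropagation: An algorithm that computes how much each weight contributed to the network’s error (the gradient of the loss with respect to that weight) by applying the chain rule backward from the output layer to the input layer. An optimizer such as gradient descent then uses these gradients to update the weights, typically after each batch of training examples.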

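- Convolutional Neural Networks (CNNs): A type of deep neural network that is especially effective at processing grid-structured data such as images, sliding small learned filters across the input to detect local patterns like edges and textures.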
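To see what backpropagation computes, here is a minimal NumPy sketch of a two-layer network trained on the XOR problem. The architecture (2 inputs, 4 hidden sigmoid neurons, 1 sigmoid output), the mean squared error loss, the learning rate, and the number of steps are all assumptions for illustration. The backward pass applies the chain rule from the output layer back to the input layer to get the gradient of the loss with respect to every weight, and plain gradient descent then uses those gradients to update the weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_gradients(params, X, y):
    """Forward pass, mean squared error loss, and backpropagated gradients."""
    W1, b1, W2, b2 = params
    # Forward pass: keep the intermediate values, the backward pass needs them.
    h = sigmoid(X @ W1 + b1)       # hidden activations, shape (n, 4)
    y_hat = sigmoid(h @ W2 + b2)   # network outputs, shape (n, 1)
    loss = np.mean((y_hat - y) ** 2)

    # Backward pass: chain rule, layer by layer, from output to input.
    n = X.shape[0]
    d_yhat = 2.0 * (y_hat - y) / n          # dLoss/dy_hat
    d_z2 = d_yhat * y_hat * (1.0 - y_hat)   # back through the output sigmoid
    dW2 = h.T @ d_z2
    db2 = d_z2.sum(axis=0)
    d_h = d_z2 @ W2.T                       # error signal sent to hidden layer
    d_z1 = d_h * h * (1.0 - h)              # back through the hidden sigmoid
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]

rng = np.random.default_rng(seed=0)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)  # XOR targets
params = [rng.normal(size=(2, 4)), np.zeros(4),
          rng.normal(size=(4, 1)), np.zeros(1)]

learning_rate = 0.5
initial_loss, _ = loss_and_gradients(params, X, y)
for _ in range(5000):
    loss, grads = loss_and_gradients(params, X, y)
    # Gradient descent step: move each parameter against its gradient.
    params = [p - learning_rate * g for p, g in zip(params, grads)]
print(f"loss before training: {initial_loss:.4f}, after: {loss:.4f}")
```

Modern frameworks compute these gradients automatically, but the underlying idea, reusing the forward-pass values and applying the chain rule backward, is the same.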

Real-world example: Self-driving cars use CNNs to process and interpret visual information from their surroundings for navigation and decision-making.
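The central operation in a CNN is convolution: a small grid of weights (a filter, or kernel) slides across the image, and each output value is the weighted sum of the pixels under it. The minimal NumPy sketch below uses a hand-made vertical-edge filter and a toy 5×5 image, both assumptions for illustration; in a trained CNN the filter values are learned through backpropagation rather than chosen by hand.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers (no padding, stride 1).

    Like the convolution layers in most deep learning frameworks, this slides
    the kernel without flipping it, which is technically cross-correlation.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output value is a weighted sum over one small patch.
            patch = image[i:i + kh, j:j + kw]
            output[i, j] = np.sum(patch * kernel)
    return output

# A tiny 5x5 "image": dark (0) on the left, bright (1) on the right.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-made vertical-edge detector; a real CNN would learn these weights.
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)
print(conv2d(image, kernel))
```

Each row of the result is [3, 3, 0]: the filter responds strongly where its window straddles the dark-to-bright boundary and gives 0 over the uniformly bright region.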

Applications of Deep Learning

Deep learning has a wide range of applications, transforming industries from healthcare to finance. Its ability to process large volumes of data and identify patterns makes it valuable for tasks such as predictive analysis and natural language processing.

- Healthcare: Used for disease detection and medical imaging analysis.

- Finance: Applied in algorithmic trading and fraud detection.

- Natural Language Processing: Powers chatbots and translation services.

Real-world example: In healthcare, deep learning algorithms analyze medical images to help detect diseases such as cancer, in some tasks faster and more accurately than traditional methods.

Challenges and Future

While deep learning offers immense potential, it also faces challenges such as the need for large datasets, high computational power, and the risk of overfitting, where a model fits its training data so closely that it performs poorly on new data. However, the future is promising, with ongoing research focused on overcoming these challenges and improving the efficiency and applicability of neural networks.
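Overfitting is easiest to see on a toy problem. The sketch below swaps in polynomial curve fitting as a stand-in for a neural network (an assumption for illustration, chosen because polynomials are cheap to fit exactly), and the data are synthetic. The more flexible degree-9 model always fits the 12 training points at least as well as the degree-3 model, but it can score worse on fresh test data, which is the signature of overfitting.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic data (assumption): noisy samples of y = sin(pi * x).
x_train = np.linspace(-1, 1, 12)
y_train = np.sin(np.pi * x_train) + rng.normal(scale=0.2, size=x_train.size)
x_test = rng.uniform(-1, 1, size=200)
y_test = np.sin(np.pi * x_test) + rng.normal(scale=0.2, size=x_test.size)

def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return np.mean((np.polyval(coeffs, x) - y) ** 2)

results = {}
for degree in (3, 9):
    # Least-squares polynomial fit: a higher degree means a more flexible model.
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree {degree}: train MSE {results[degree][0]:.3f}, "
          f"test MSE {results[degree][1]:.3f}")
```

Comparing training error with error on held-out data, as this example does, is the usual way to detect overfitting in neural networks too.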

Real-world example: Researchers are developing more efficient neural network models that require less data and computational resources, making deep learning more accessible and practical for various applications.

Conclusion

In conclusion, deep learning and neural networks represent a significant leap in artificial intelligence, offering powerful tools for transforming vast amounts of raw data into meaningful insights. As technology and research advance, the applications and capabilities of deep learning are likely to keep expanding, opening new frontiers in data analysis and problem-solving.

This guide has introduced the fundamentals of deep learning, from neural networks to their wide-ranging applications, giving you a foundation for understanding and applying this transformative technology.