Introduction to Data Engineering

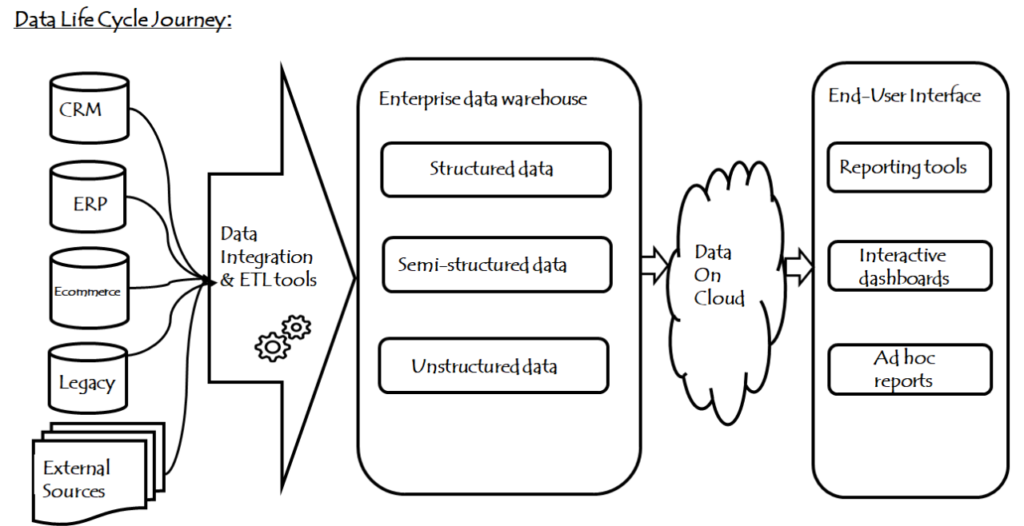

Data engineering is the process of designing, building, and managing the infrastructure and systems that enable data collection, storage, processing, and analysis. It serves as the foundation for data science and analytics, ensuring that data is accessible, reliable, and ready for analysis. As businesses increasingly rely on data-driven decision-making, the demand for skilled data engineers has grown significantly.

Data engineering plays a critical role in turning raw data into insights that drive business strategy. By implementing robust data pipelines, data engineers ensure that data flows reliably from various sources to data warehouses and analytics platforms. This dependable data movement lets data scientists focus on extracting meaningful patterns and insights instead of spending their time locating, cleaning, and reconciling data. With the advent of big data and advanced analytics, the scope of the field continues to expand, making it an indispensable part of modern data ecosystems.

Fundamentals of Data Engineering

Understanding the fundamentals of data engineering is crucial for anyone looking to enter this field. Key concepts include:

- Data Collection: Gathering data from various sources such as databases, APIs, and IoT devices.

- Data Storage: Storing data in databases, data warehouses, and data lakes.

- Data Processing: Converting raw data into a usable format through cleaning, normalization, and other transformations.

- Data Pipelines: Automating the flow of data from collection to storage and processing.

- Data Integration: Combining data from different sources to provide a unified view.
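To make these stages concrete, here is a minimal Python sketch of a single pipeline run using only the standard library. It assumes a hypothetical CSV export as the data source (collection), cleans and normalizes the rows (processing), and writes them to an in-memory SQLite database standing in for a warehouse (storage). The function names `extract`, `transform`, `load`, and `run_pipeline` are illustrative only; a production pipeline would add scheduling, error handling, and incremental loads.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (collection step).
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,
3,CAROL,5.50
"""


def extract(text: str) -> list[dict]:
    """Collect: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Process: clean and normalize rows, dropping rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append((
            int(row["order_id"]),
            row["customer"].strip().title(),  # normalize whitespace and casing
            float(amount),
        ))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Store: write cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(text: str, conn: sqlite3.Connection) -> int:
    """Pipeline: chain extract -> transform -> load; return rows loaded."""
    rows = transform(extract(text))
    load(rows, conn)
    return len(rows)


conn = sqlite3.connect(":memory:")
print(run_pipeline(RAW_CSV, conn))  # 2
print(conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall())
# [('Alice', 19.99), ('Carol', 5.5)]
```

Keeping each stage in its own function makes the stages easy to test in isolation and lets the SQLite target be swapped for a real warehouse later.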

Mastering these core concepts of data engineering makes data-handling workflows reliable and efficient. Each component, from data collection to integration, plays a part in a robust data infrastructure. By focusing on these fundamentals, professionals can build scalable systems that support comprehensive analytics and informed decision-making. A solid grasp of the basics also lays the groundwork for more advanced techniques and tools, which makes it indispensable for anyone aspiring to excel in this field.

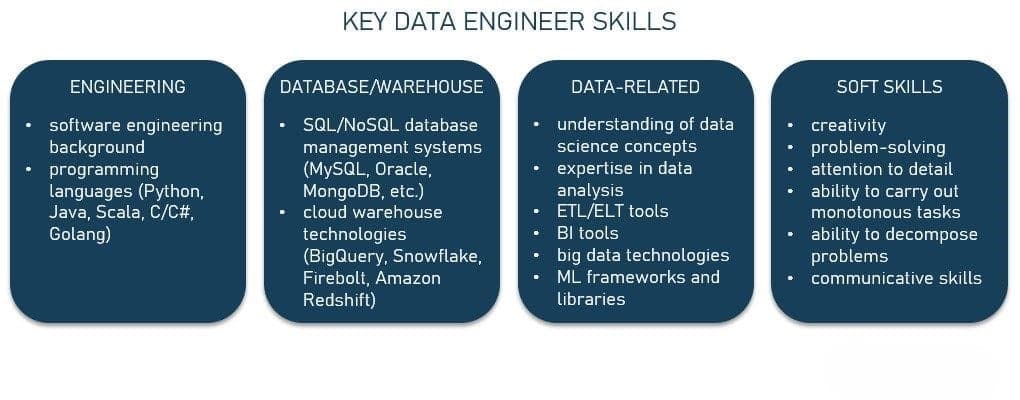

Key Data Engineer Skills

To excel in data engineering, one needs a diverse set of technical and analytical skills. These include:

- Programming Languages: Proficiency in languages such as Python, Java, and SQL is essential for data manipulation and automation.

- Database Management: Understanding of relational (SQL) and non-relational (NoSQL) databases.

- ETL Processes: Knowledge of Extract, Transform, and Load (ETL) processes to move data between systems.

- Big Data Technologies: Familiarity with big data tools like Apache Hadoop, Apache Spark, and Kafka.

- Cloud Platforms: Experience with cloud services such as AWS, Google Cloud, and Azure for data storage and processing.

- Data Modeling: Ability to design data models that support efficient data storage and retrieval.
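Data modeling and SQL often meet in a dimensional (star) schema, where a central fact table of measurable events references descriptive dimension tables. The sketch below builds a tiny, hypothetical sales model in SQLite through Python's built-in `sqlite3` module; the table names, columns, and sample rows are assumptions for illustration, not a prescribed design.

```python
import sqlite3

# Hypothetical star schema: one fact table referencing two dimension tables.
SCHEMA = """
CREATE TABLE dim_customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    country     TEXT NOT NULL
);
CREATE TABLE dim_date (
    date_id INTEGER PRIMARY KEY,  -- e.g. 20240115
    year    INTEGER NOT NULL,
    month   INTEGER NOT NULL
);
CREATE TABLE fact_sales (
    sale_id     INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
    date_id     INTEGER NOT NULL REFERENCES dim_date(date_id),
    amount      REAL NOT NULL
);
"""


def build_model() -> sqlite3.Connection:
    """Create the schema in memory and insert a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)",
                     [(1, "Alice", "DE"), (2, "Bob", "US")])
    conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                     [(20240115, 2024, 1), (20240210, 2024, 2)])
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
                     [(1, 1, 20240115, 10.0), (2, 2, 20240115, 25.0),
                      (3, 1, 20240210, 5.0)])
    return conn


def revenue_by_country_and_month(conn: sqlite3.Connection) -> list[tuple]:
    """Analytical query: join facts to dimensions, then aggregate."""
    return conn.execute("""
        SELECT c.country, d.month, SUM(f.amount)
        FROM fact_sales f
        JOIN dim_customer c ON c.customer_id = f.customer_id
        JOIN dim_date d ON d.date_id = f.date_id
        GROUP BY c.country, d.month
        ORDER BY c.country, d.month
    """).fetchall()


print(revenue_by_country_and_month(build_model()))
# [('DE', 1, 10.0), ('DE', 2, 5.0), ('US', 1, 25.0)]
```

Because the fact table carries only keys and measures, the same data can be sliced by any dimension with a join and a `GROUP BY`.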

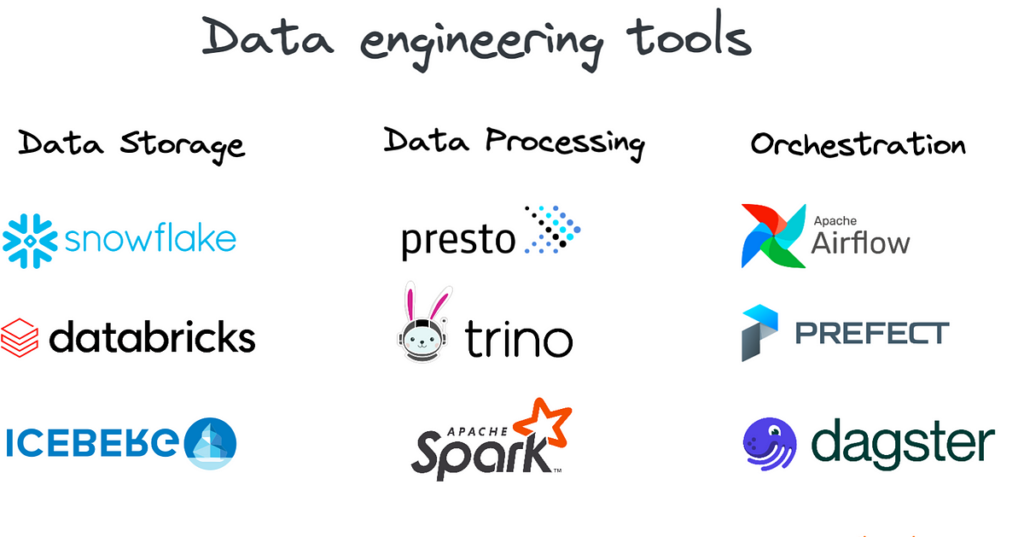

Essential Data Engineering Tools

Data engineering relies on a variety of tools to manage and process data. Some of the essential tools include:

- Apache Hadoop: A framework that allows for distributed processing of large data sets across clusters of computers.

- Apache Spark: An open-source unified analytics engine for big data processing, with built-in modules for streaming, SQL, machine learning, and graph processing.

- Kafka: A distributed streaming platform used for building real-time data pipelines and streaming applications.

- Airflow: A platform to programmatically author, schedule, and monitor workflows.

- Snowflake: A fully managed cloud data platform built around a data warehouse.

- Tableau: A data visualization tool that helps create interactive and shareable dashboards.
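Workflow orchestrators such as Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and run each task only after its upstream dependencies have finished. The following plain-Python sketch illustrates just that dependency-ordering idea with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It is not Airflow's API and omits scheduling, retries, and monitoring; the task names and the `run_dag` helper are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_dag(tasks: dict[str, Callable[[], None]],
            deps: dict[str, set[str]]) -> list[str]:
    """Run every task after all of its upstream tasks; return the run order."""
    # Include tasks with no declared dependencies so they still run.
    graph = {name: deps.get(name, set()) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        tasks[name]()  # a real orchestrator would add retries, logging, etc.
    return order


# Hypothetical tasks: two extracts feed a transform, which feeds a load.
ran: list[str] = []
tasks = {
    "extract_orders": lambda: ran.append("extract_orders"),
    "extract_customers": lambda: ran.append("extract_customers"),
    "transform": lambda: ran.append("transform"),
    "load_warehouse": lambda: ran.append("load_warehouse"),
}
deps = {
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
}
order = run_dag(tasks, deps)
print(order)
```

`TopologicalSorter` raises `graphlib.CycleError` when the dependencies contain a cycle, which is one reason orchestrators require pipelines to be acyclic.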

Data Engineering vs Data Science

While data engineering and data science are closely related, they serve different purposes. Data engineers focus on building the infrastructure and tools needed to collect, store, and process data. In contrast, data scientists analyze this data to extract insights and build predictive models. Both roles are essential in the data ecosystem, and their collaboration is crucial for successful data-driven projects.

| Aspect | Data Engineering | Data Science |
|---|---|---|
| Primary Focus | Building data infrastructure and tools | Analyzing data to extract insights and build predictive models |
| Key Responsibilities | Data collection, storage, processing, and pipeline automation | Data analysis, statistical modeling, and predictive analytics |
| Tools and Technologies | Apache Hadoop, Apache Spark, Kafka, SQL, NoSQL databases | Python, R, TensorFlow, scikit-learn, data visualization tools |
| Data Handling | Ensuring data quality, integrity, and accessibility | Cleaning, exploring, and interpreting data |
| Collaboration | Provides clean, organized data for data scientists | Utilizes data infrastructure set up by data engineers |
| Outcome | Robust data infrastructure enabling efficient data workflows | Insights and models driving business decisions and strategies |
| Role in Data Projects | Lays the groundwork for data processing and storage | Focuses on deriving actionable insights from processed data |

Data Engineering Jobs and Career Paths

This field offers a range of career opportunities, from entry-level positions to senior roles. Common job titles include:

- Data Engineer: Responsible for building and maintaining data pipelines and databases.

- Data Architect: Designs the architecture for data systems and ensures scalability and efficiency.

- ETL Developer: Specializes in developing ETL processes to move data between systems.

- Big Data Engineer: Focuses on handling large volumes of data using big data technologies.

- Machine Learning Engineer: Combines data engineering skills with machine learning to build and deploy models.

Best Practices in Data Engineering

Adhering to best practices is crucial to the success of data engineering projects. Good practices maintain data integrity, optimize performance, enhance security, and streamline workflows, helping data engineers build robust, efficient systems for data management and analysis. Key practices to consider include:

- Ensure Data Quality: Implement rigorous data validation and cleaning processes to maintain data integrity.

- Automate Processes: Use automation tools to streamline data workflows and reduce manual intervention.

- Optimize Performance: Continuously monitor and optimize data pipelines and storage systems for performance.

- Secure Data: Implement robust security measures to protect sensitive data from unauthorized access.

- Document Processes: Maintain comprehensive documentation for all data engineering processes and systems.
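Data quality checks are often expressed as named rules applied to each record, with failing records quarantined together with the reasons they failed rather than silently dropped. The sketch below shows that pattern in plain Python; the rule names, fields, and sample records describe a hypothetical orders feed and are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Record = dict[str, Any]

# Hypothetical rules for an orders feed: each maps a rule name to a check.
RULES: dict[str, Callable[[Record], bool]] = {
    "order_id present": lambda r: r.get("order_id") is not None,
    "amount non-negative": lambda r: (
        isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0
    ),
    "currency is 3 letters": lambda r: (
        isinstance(r.get("currency"), str)
        and len(r["currency"]) == 3
        and r["currency"].isalpha()
    ),
}


@dataclass
class ValidationResult:
    valid: list[Record] = field(default_factory=list)
    # Each rejected entry keeps the record plus the names of the rules it failed.
    rejected: list[tuple[Record, list[str]]] = field(default_factory=list)


def validate(records: list[Record],
             rules: dict[str, Callable[[Record], bool]] = RULES) -> ValidationResult:
    """Split records into valid ones and quarantined ones with failure reasons."""
    result = ValidationResult()
    for rec in records:
        failures = [name for name, check in rules.items() if not check(rec)]
        if failures:
            result.rejected.append((rec, failures))  # quarantine, don't drop
        else:
            result.valid.append(rec)
    return result


records = [
    {"order_id": 1, "amount": 19.99, "currency": "EUR"},
    {"order_id": None, "amount": 5.0, "currency": "USD"},
    {"order_id": 3, "amount": -2.0, "currency": "usd$"},
]
res = validate(records)
print(len(res.valid), [reasons for _, reasons in res.rejected])
# 1 [['order_id present'], ['amount non-negative', 'currency is 3 letters']]
```

Recording why each record failed makes quarantined data easy to review and reprocess, and the rule table doubles as lightweight documentation of what the pipeline expects.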

Real-World Applications

Data engineering plays a crucial role in various industries, enabling them to harness the power of data. Some real-world applications include:

- E-commerce: Building recommendation engines and optimizing inventory management through data analysis.

- Healthcare: Integrating patient data from various sources to improve diagnosis and treatment.

- Finance: Developing fraud detection systems and risk management tools.

- Manufacturing: Monitoring production processes and predicting maintenance needs through IoT data.

- Telecommunications: Enhancing network performance and customer experience through data analysis.

Challenges in Data Engineering

Despite its numerous benefits, data engineering is not without its challenges. One major issue is data complexity: handling diverse data types and sources can be intricate and demanding. Ensuring that data systems scale efficiently with ever-growing data volumes is another significant hurdle. Integrating data from multiple sources and formats also poses substantial difficulties, often requiring careful mapping and reconciliation to keep the combined data consistent.

Moreover, minimizing latency to enable real-time analytics is a critical challenge that data engineers face. Ensuring quick data processing times is vital for timely insights, especially in dynamic environments. Security is another paramount concern, as protecting data from breaches and maintaining compliance with stringent regulations are essential to upholding data integrity and trust.

Key Challenges:

- Data Complexity: Handling diverse data types and sources can be intricate and demanding.

- Scalability: Ensuring data systems can scale efficiently with growing data volumes.

- Integration: Harmonizing data from multiple sources and varying formats.

- Latency: Minimizing data processing delays to enable real-time analytics.

- Security: Protecting data from breaches and ensuring regulatory compliance.
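Integration usually comes down to mapping each source onto one agreed schema and deciding how to resolve conflicts. In this minimal sketch, two hypothetical systems (a CRM and a billing system) describe the same customers with different field names, email casing, and date formats. Each source gets its own mapping function, and the CRM is assumed to be the source of truth whenever both contain the same customer.

```python
from datetime import date, datetime
from typing import Any

Record = dict[str, Any]

# Hypothetical source data: same customers, different field names and formats.
crm_rows = [
    {"customerId": "42", "email": "A@Example.com", "signup": "2024-01-15"},
]
billing_rows = [
    {"cust_no": 42, "email_address": "a@example.com ", "created": "15/01/2024"},
    {"cust_no": 7, "email_address": "b@example.com", "created": "03/02/2024"},
]


def from_crm(row: Record) -> Record:
    """Map a CRM row (ISO dates) onto the unified schema."""
    return {
        "customer_id": int(row["customerId"]),
        "email": row["email"].strip().lower(),
        "signup_date": date.fromisoformat(row["signup"]),
    }


def from_billing(row: Record) -> Record:
    """Map a billing row (day/month/year dates) onto the unified schema."""
    return {
        "customer_id": int(row["cust_no"]),
        "email": row["email_address"].strip().lower(),
        "signup_date": datetime.strptime(row["created"], "%d/%m/%Y").date(),
    }


def integrate(crm: list[Record], billing: list[Record]) -> list[Record]:
    """Combine both sources into one list keyed by customer_id."""
    unified: dict[int, Record] = {}
    # CRM records are inserted first, so setdefault keeps them on conflict
    # (the "CRM wins" rule is an assumption for this example).
    for rec in [from_crm(r) for r in crm] + [from_billing(r) for r in billing]:
        unified.setdefault(rec["customer_id"], rec)
    return sorted(unified.values(), key=lambda r: r["customer_id"])


out = integrate(crm_rows, billing_rows)
print([(r["customer_id"], r["signup_date"].isoformat()) for r in out])
# [(7, '2024-02-03'), (42, '2024-01-15')]
```

Keeping one mapping function per source means a new source or a changed format only touches its own mapper, while the conflict rule stays in one place.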

Future Trends

The field of data engineering is continuously evolving, with several trends shaping its future:

- AI and Machine Learning Integration: Leveraging AI and machine learning to automate data processing tasks and improve efficiency.

- Data Mesh Architecture: Decentralizing data ownership and enabling cross-functional teams to manage their data.

- Real-Time Data Processing: Increasing focus on real-time analytics to enable faster decision-making.

- DataOps: Applying DevOps principles to data engineering to improve collaboration and streamline workflows.

- Edge Computing: Processing data closer to its source to reduce latency and improve performance.
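Real-time processing commonly aggregates events over time windows rather than over a complete dataset. The sketch below computes per-key counts in tumbling (fixed-size, non-overlapping) windows as events stream in, emitting each window once a later event shows it has closed. It assumes integer timestamps in seconds that arrive in order, and the `tumbling_window_counts` helper is hypothetical; stream processors such as Spark Structured Streaming or Kafka Streams additionally handle late or out-of-order events, which this sketch does not.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_s: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Yield (window_start, counts_per_key) for each non-empty window.

    Assumes events are (timestamp_seconds, key) pairs in timestamp order.
    Windows with no events are skipped rather than emitted as empty.
    """
    current_start = None
    counts: dict[str, int] = defaultdict(int)
    for ts, key in events:
        start = ts - ts % window_s  # align timestamp to its window start
        if current_start is None:
            current_start = start
        if start != current_start:
            yield current_start, dict(counts)  # previous window is closed
            counts = defaultdict(int)
            current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final window


events = [(0, "click"), (3, "view"), (4, "click"), (12, "click"), (25, "view")]
for start, counts in tumbling_window_counts(events, window_s=10):
    print(start, counts)
# 0 {'click': 2, 'view': 1}
# 10 {'click': 1}
# 20 {'view': 1}
```

Because the generator holds only the current window's counts, memory use stays constant regardless of how long the stream runs.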

Conclusion

Data engineering is a critical discipline that underpins the data-driven decision-making processes of modern businesses. By understanding key concepts, acquiring essential skills, and leveraging the right tools, data engineers can build robust data infrastructures that enable organizations to harness the full potential of their data. As the field continues to evolve, staying updated with the latest trends and best practices will be crucial for success.

FAQs

What skills are required to become a data engineer?

Key skills for data engineers include programming (Python, Java, SQL), database management, ETL processes, big data technologies (Hadoop, Spark), cloud platforms (AWS, Google Cloud, Azure), and data modeling.

What are some essential tools for data engineering?

Essential tools include Apache Hadoop, Apache Spark, Kafka, Airflow, Snowflake, and Tableau. These tools help manage and process data efficiently.

How does data engineering differ from data science?

Data engineering focuses on building the infrastructure and tools needed for data collection, storage, and processing, while data science focuses on analyzing data to extract insights and build predictive models. Both roles are complementary and essential for data-driven projects.

What are some common data engineering jobs?

Common data engineering jobs include Data Engineer, Data Architect, ETL Developer, Big Data Engineer, and Machine Learning Engineer.

What are the future trends in data engineering?

Future trends include AI and machine learning integration, data mesh architecture, real-time data processing, DataOps, and edge computing. These advancements will shape the field and improve data management processes.
