Data pipelines are the backbone of modern data-driven organizations. They move data from various sources to its destinations, transforming and processing it along the way. This blog post explores the essential tools and architecture of data pipelines, highlighting their significance, components, and real-world applications.

Introduction to Data Pipelines

Data pipelines are essential frameworks for transferring data between different systems, enabling businesses to gather, process, and analyze vast amounts of information efficiently. With the exponential growth of data, organizations must leverage effective data pipelines to maintain their competitive edge.

The primary goal of a data pipeline is to automate and streamline the data processing workflow, ensuring data integrity and availability for analytics and decision-making. This automation minimizes manual intervention, reducing errors and enhancing productivity.

Understanding Data Pipeline Architecture

Data pipeline architecture encompasses the design and structure that facilitate data flow through various stages, from collection to consumption. It involves multiple processes such as data extraction, transformation, and loading (ETL).

A robust data pipeline architecture includes the following key elements:

- Data Sources: Origins of data, such as databases, APIs, or sensors.

- Data Ingestion: Methods to collect and import data from sources.

- Data Processing: Transforming raw data into a usable format.

- Data Storage: Storing processed data in data lakes, warehouses, or databases.

- Data Access: Enabling users and applications to retrieve and utilize data.

A well-designed data pipeline architecture ensures that data moves smoothly and efficiently through each stage, minimizing bottlenecks and delays. This seamless flow is crucial for supporting real-time analytics and decision-making. Moreover, by implementing a flexible and scalable architecture, organizations can easily adapt to changing data needs and incorporate new technologies, ensuring that the data pipeline remains robust and future-proof.
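
The elements listed above can be sketched end to end in a few lines of Python. This is a minimal illustration using only the standard library, not a production design. The `orders` table, the sample CSV, and all function names are assumptions made up for the example, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export standing in for a real data source (database, API, sensor feed).
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
3,alice,12.50
"""

def ingest(raw_text):
    """Data ingestion: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def process(rows):
    """Data processing: cast text fields to proper types for downstream use."""
    return [(int(r["order_id"]), r["customer"], float(r["amount"])) for r in rows]

def store(records, conn):
    """Data storage: persist processed records in a table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

def total_by_customer(conn):
    """Data access: a query that a report or application might run."""
    cur = conn.execute(
        "SELECT customer, ROUND(SUM(amount), 2) FROM orders "
        "GROUP BY customer ORDER BY customer"
    )
    return dict(cur.fetchall())

def run_pipeline(raw_text):
    """Chain the stages: source -> ingestion -> processing -> storage -> access."""
    conn = sqlite3.connect(":memory:")  # in-memory database, an assumption for the demo
    store(process(ingest(raw_text)), conn)
    return total_by_customer(conn)

result = run_pipeline(RAW_CSV)
print(result)
```

Keeping each stage in its own function means a stage can be swapped (for example, replacing the CSV reader with an API client) without touching the others.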

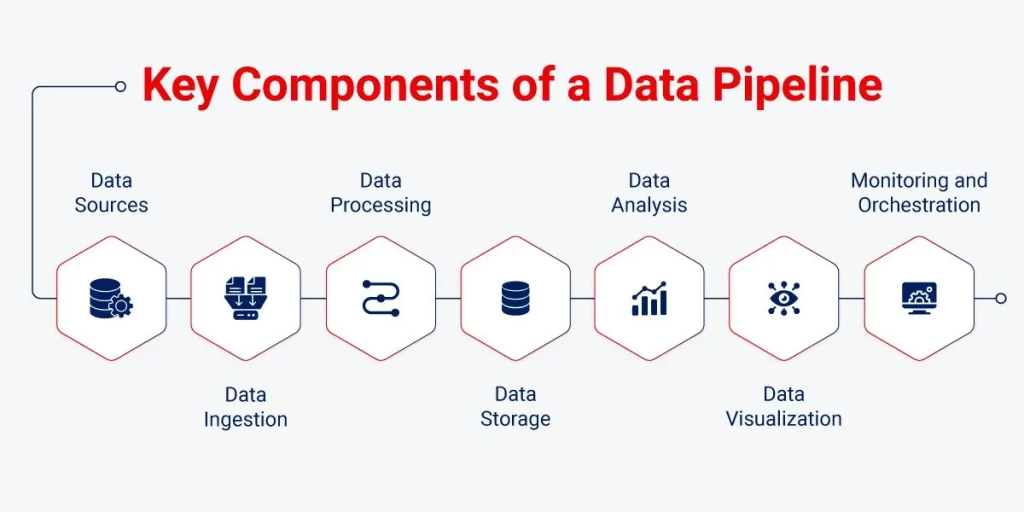

Key Components of a Data Pipeline

A typical data pipeline comprises several components that work together to ensure seamless data flow:

- Data Ingestion: This component is responsible for collecting data from various sources. Tools like Apache Kafka and Amazon Kinesis are often used to facilitate real-time data ingestion.

- Data Transformation: Transforming raw data into a structured format is crucial for analysis. Technologies like Apache Spark and Apache Beam provide powerful capabilities for data transformation.

- Data Storage: Storing processed data efficiently is essential for quick retrieval. Data storage solutions like Amazon S3, Google Cloud Storage, and Hadoop Distributed File System (HDFS) are commonly used.

- Data Orchestration: Orchestrating the entire data pipeline ensures that tasks are executed in the correct sequence. Tools like Apache Airflow and AWS Step Functions manage workflows and dependencies.
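
To make the orchestration idea concrete, here is a small sketch of running tasks in dependency order. It uses Python's standard `graphlib` module rather than Airflow or Step Functions, so it only illustrates the ordering principle. The task names and the `run_in_dependency_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter

def run_in_dependency_order(tasks, dependencies):
    """Run each task only after all of the tasks it depends on have finished.

    tasks: mapping of task name -> zero-argument callable
    dependencies: mapping of task name -> set of task names it depends on
    """
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order

log = []
tasks = {
    "ingest": lambda: log.append("ingest"),
    "transform": lambda: log.append("transform"),
    "store": lambda: log.append("store"),
}
dependencies = {
    "ingest": set(),
    "transform": {"ingest"},
    "store": {"transform"},
}

execution_order = run_in_dependency_order(tasks, dependencies)
print(execution_order)
```

If the dependencies contain a cycle, `static_order()` raises `graphlib.CycleError`, which is the kind of mistake an orchestrator needs to reject before anything runs. Real orchestration tools add scheduling, retries, and parallel execution on top of this ordering.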

Popular Data Pipeline Tools

Several tools and technologies facilitate the development and management of data pipelines. Here are some of the most popular ones:

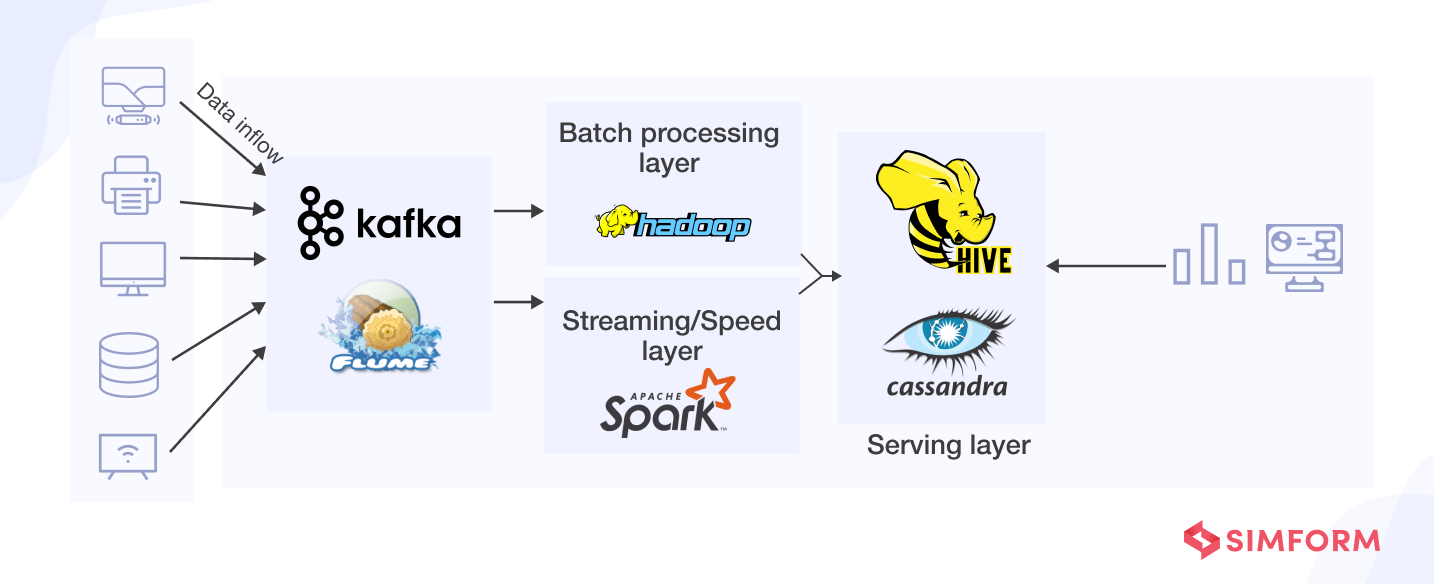

4.1 Apache Kafka

Apache Kafka is a distributed streaming platform that enables real-time data ingestion and processing. It is widely used for building scalable data pipelines and event-driven applications.

4.2 Apache Spark

Apache Spark is a powerful open-source data processing engine that supports batch and stream processing. Its ability to handle large-scale data processing makes it a preferred choice for data transformation tasks.

4.3 Apache Airflow

Apache Airflow is an open-source platform for orchestrating complex workflows and data pipelines. It allows developers to define tasks and their dependencies as code, providing flexibility and scalability.

4.4 AWS Glue

AWS Glue is a fully managed ETL service that simplifies data preparation and transformation. It integrates seamlessly with other AWS services, making it ideal for building data pipelines on the cloud.

Building an Effective Data Pipeline

Building an effective data pipeline is essential for ensuring that data is efficiently collected, processed, and delivered to end-users. A well-structured pipeline not only facilitates seamless data flow but also enhances the quality and reliability of the data. The process begins with defining clear requirements, which involves understanding the sources of data, the specific processing needs, and the expectations of end-users.

By setting clear goals from the outset, organizations can ensure that their data pipeline meets all necessary demands and functions optimally. Choosing the right tools and designing a robust architecture are critical steps in constructing an effective data pipeline. Selecting tools that match the volume and complexity of data can significantly impact the pipeline’s performance and scalability.

Once the architecture is in place, implementing data quality checks is essential to maintain data integrity. Regular monitoring and optimization ensure that the pipeline continues to perform efficiently and can adapt to growing data needs, enabling organizations to derive maximum value from their data assets.

To build an effective data pipeline, consider the following steps:

- Define Requirements: Understand the data sources, processing needs, and end-user requirements.

- Choose the Right Tools: Select tools that align with your data volume, complexity, and budget.

- Design the Architecture: Plan the data flow, transformation processes, and storage solutions.

- Implement Data Quality Checks: Ensure data accuracy and consistency through validation and cleansing.

- Monitor and Optimize: Continuously monitor pipeline performance and optimize for efficiency and scalability.
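
As an illustration of the data quality step, the sketch below cleanses records and then separates valid ones from rejected ones. The field names (`customer_id`, `amount`) and the rules are assumptions chosen for the example; real checks depend on the requirements defined in the first step.

```python
def cleanse(record):
    """Cleansing: trim stray whitespace from string fields."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}

def validate_record(record):
    """Validation: return a list of problems (an empty list means the record is valid)."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    amount = record.get("amount")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        problems.append("amount is not a number")
    elif amount < 0:
        problems.append("amount is negative")
    return problems

def split_valid_invalid(records):
    """Cleanse every record, then route it to the valid or rejected list."""
    valid, rejected = [], []
    for record in records:
        cleaned = cleanse(record)
        problems = validate_record(cleaned)
        if problems:
            rejected.append((cleaned, problems))
        else:
            valid.append(cleaned)
    return valid, rejected

records = [
    {"customer_id": " c1 ", "amount": 10.0},
    {"customer_id": "", "amount": 5},
    {"customer_id": "c3", "amount": -1},
    {"customer_id": "c4", "amount": "n/a"},
]
valid, rejected = split_valid_invalid(records)
print(len(valid), "valid,", len(rejected), "rejected")
```

Keeping the rejected records together with the reasons, instead of silently dropping them, makes it easier to trace quality problems back to their source.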

Real-World Applications of Data Pipelines

Data pipelines are utilized across various industries to drive innovation and efficiency:

- Finance: Automated data pipelines streamline transaction processing, fraud detection, and financial reporting.

- Healthcare: Pipelines enable real-time patient data monitoring, improving treatment outcomes and operational efficiency.

- E-commerce: Data pipelines enhance customer experience by personalizing recommendations and optimizing inventory management.

- IoT: Real-time data pipelines process sensor data for predictive maintenance and smart city applications.

Challenges in Data Pipeline Implementation

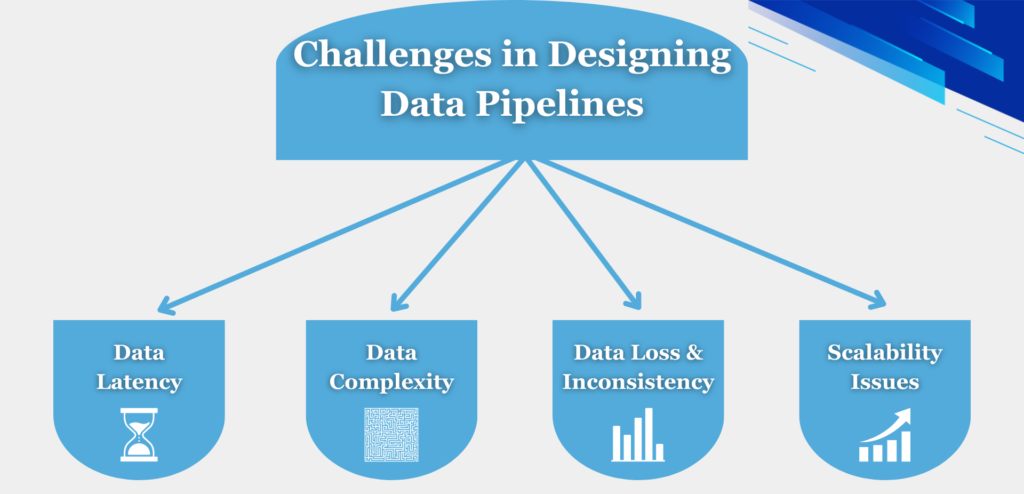

Implementing data pipelines involves more than just the technical setup; it requires a strategic approach to manage the complexities of modern data ecosystems. One of the primary challenges is ensuring the pipeline’s adaptability to handle evolving business needs and technological advancements.

As organizations expand and integrate new data sources, the pipeline must be flexible enough to incorporate these changes without extensive reconfiguration. Moreover, maintaining data consistency and reliability across the pipeline is critical to avoid discrepancies that could lead to faulty analytics and decision-making. Organizations must also balance the need for speed with accuracy, ensuring that rapid data processing does not compromise the integrity of the information.

Effective collaboration between data engineers, IT professionals, and business stakeholders is essential to address these challenges and create a data pipeline that supports organizational goals.

Implementing data pipelines can be challenging due to:

- Data Complexity: Managing diverse data formats and sources requires robust integration capabilities.

- Scalability: Ensuring the pipeline can handle increasing data volumes without performance degradation.

- Latency: Minimizing delays in data processing to enable real-time insights.

- Security: Protecting sensitive data from unauthorized access and breaches.
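
The data complexity challenge can be illustrated with a small sketch that maps two differently shaped feeds onto one shared record shape. The feeds, the field names (`id`/`value` versus `sensor_id`/`reading`), and the target schema are all hypothetical.

```python
import csv
import io
import json

def from_json_lines(text):
    """Parse newline-delimited JSON into dictionaries."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]

def from_csv(text):
    """Parse CSV text with a header row into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def to_common_schema(record):
    """Map a source record to the shared shape: sensor_id (str), reading (float)."""
    sensor_id = record["sensor_id"] if "sensor_id" in record else record["id"]
    reading = record["reading"] if "reading" in record else record["value"]
    return {"sensor_id": str(sensor_id), "reading": float(reading)}

# Two hypothetical sources that describe the same kind of data differently.
json_feed = '{"id": 7, "value": 21.5}\n{"id": 8, "value": 19.0}\n'
csv_feed = "sensor_id,reading\n9,22.25\n"

unified = [to_common_schema(r) for r in from_json_lines(json_feed) + from_csv(csv_feed)]
print(unified)
```

Converting every source to one schema at the edge of the pipeline keeps the downstream stages free of format-specific logic.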

Best Practices for Data Pipeline Management

To ensure successful data pipeline management, follow these best practices:

- Automate Processes: Use automation to streamline workflows and reduce manual intervention.

- Implement Robust Monitoring: Continuously monitor pipeline performance to detect and resolve issues quickly.

- Prioritize Data Quality: Establish data validation and cleansing processes to maintain data integrity.

- Ensure Scalability: Design pipelines that can scale with your data needs and business growth.

- Enhance Security: Implement encryption and access controls to protect sensitive data.
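
One lightweight way to approach the monitoring practice is to wrap each step so that its duration and row counts are recorded. The sketch below is an assumption-laden illustration: the `run_monitored` helper and the metric names are invented for the example, and a real deployment would typically send these values to a monitoring system instead of a list.

```python
import logging
import time

logger = logging.getLogger("pipeline")

def run_monitored(step_name, func, rows, metrics):
    """Run one pipeline step and record its status, duration, and row counts."""
    started = time.perf_counter()
    try:
        result = func(rows)
    except Exception:
        # Log the traceback and record the failure, then let the caller decide what to do.
        logger.exception("step %s failed", step_name)
        metrics.append({"step": step_name, "status": "failed"})
        raise
    elapsed = time.perf_counter() - started
    metrics.append({
        "step": step_name,
        "status": "ok",
        "rows_in": len(rows),
        "rows_out": len(result),
        "seconds": elapsed,
    })
    return result

metrics = []
cleaned = run_monitored(
    "drop_empty",
    lambda rows: [r for r in rows if r],  # hypothetical step: discard empty values
    ["a", "", "b"],
    metrics,
)
print(metrics)
```

A sudden gap between `rows_in` and `rows_out`, or a step that takes much longer than usual, is often the first visible sign of a problem upstream.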

Future Trends in Data Pipeline Technology

The future of data pipeline technology is shaped by several emerging trends:

- AI and Machine Learning Integration: Leveraging AI to automate data processing and improve pipeline efficiency.

- Real-Time Analytics: Increasing demand for real-time data insights will drive the adoption of streaming data pipelines.

- Serverless Architectures: Utilizing serverless technologies to reduce operational overhead and enhance scalability.

- DataOps: Applying DevOps principles to data pipeline management for improved collaboration and agility.

FAQs

What is a data pipeline?

A data pipeline is a series of processes that automate the movement and transformation of data from one system to another, enabling efficient data integration and analysis.

What are the key components of a data pipeline?

The key components are data ingestion, transformation, storage, and orchestration.

How do data pipelines benefit businesses?

Data pipelines streamline data processing, improve data quality, and enable real-time insights, driving informed decision-making and operational efficiency.

What are the three main stages of a data pipeline?

The three main stages of a data pipeline are:

- Data Ingestion: This is the initial stage, where raw data is collected from various sources such as databases, APIs, sensors, and external files. The goal is to gather all relevant data needed for processing and analysis.

- Data Transformation: In this stage, the raw data is processed, cleaned, and transformed into a format suitable for analysis. This involves data cleansing, normalization, aggregation, and any necessary conversions to ensure data quality and consistency.

- Data Storage and Access: The final stage involves storing the transformed data in a data warehouse, data lake, or database. This ensures that the data is readily accessible for analysis and reporting by end-users and applications.