Introduction to Data Ingestion

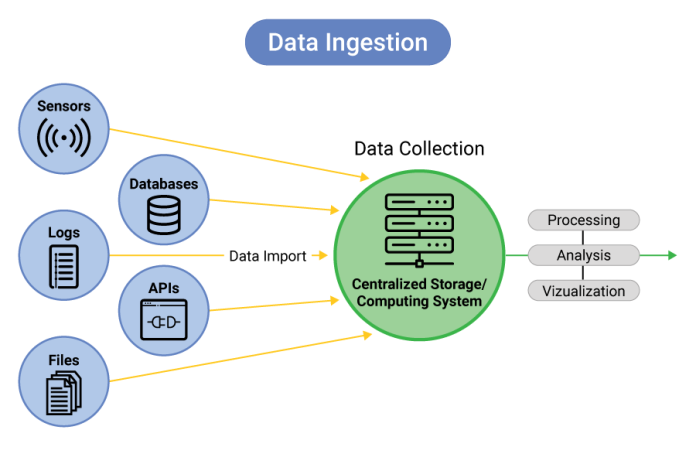

Data ingestion is the process of collecting, importing, and processing data for use or storage in a database or data warehouse. It forms the foundation of any data-driven strategy, allowing organizations to access, manipulate, and analyze large volumes of data efficiently. As the amount of information generated daily keeps growing, ingestion is what allows businesses to keep pace. Effective data ingestion ensures that data is not only accessible but also ready to be transformed into insights that drive strategic decision-making and operational efficiency.

Data ingestion is also the first step in the data lifecycle, paving the way for subsequent processing, analysis, and visualization. It can rely on batch processing, real-time streaming, or a combination of both, depending on the organization’s requirements. As data becomes the backbone of modern enterprises, optimizing ingestion processes is essential for seamless data management and improved business outcomes.

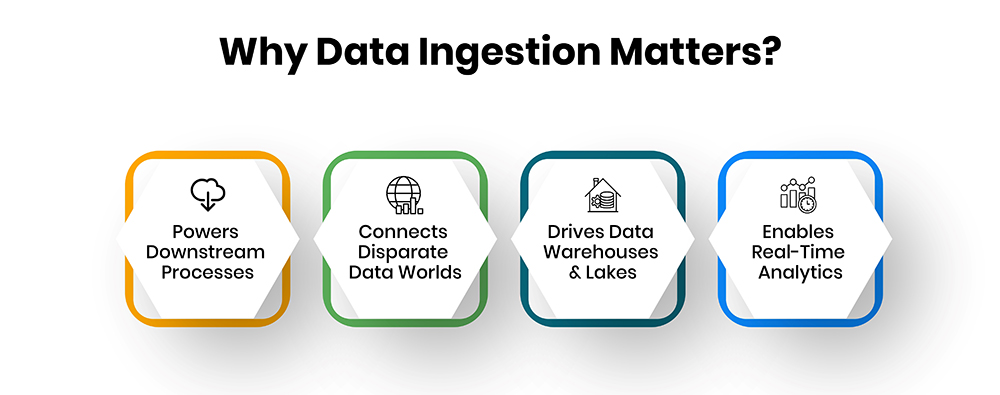

Why Data Ingestion Matters

Data ingestion is critical to any organization’s data strategy because it ensures that valuable data from various sources is collected and made available for analysis. By automating the data ingestion process, businesses can reduce the risk of errors, increase efficiency, and maintain data integrity. This, in turn, leads to more accurate analysis, better decision-making, and a competitive advantage in the market.

Efficient data ingestion also supports real-time analytics, enabling businesses to react quickly to market changes, customer behavior, or operational issues. With the right ingestion strategy, companies can maintain a continuous flow of fresh data, so the insights generated stay relevant and up to date. This capability is particularly important in industries such as finance, healthcare, and retail, where timely and accurate information is vital.

Key Reasons Why Data Ingestion Matters:

- Real-Time Decision Making: Enables quick reaction to new data, facilitating timely decision-making.

- Data Consistency: Ensures that data from various sources is consistent, accurate, and ready for analysis.

- Scalability: Supports the handling of large volumes of data, crucial for growing businesses.

- Operational Efficiency: Automates data collection and processing, reducing manual workload and errors.

- Data-Driven Insights: Provides a foundation for advanced analytics, machine learning, and AI applications.

Key Components of Data Ingestion

The key components of data ingestion ensure that data is collected, processed, and stored effectively, laying a solid foundation for analysis. Understanding the role each one plays is essential for implementing an effective data strategy. These components include:

- Data Sources: Where the data originates, such as databases, APIs, IoT devices, social media platforms, and cloud storage.

- Data Collection: Methods for gathering data, which may involve scraping, querying, or streaming data from various sources.

- Data Transformation: The process of converting raw data into a standardized format suitable for analysis, including cleaning, filtering, and enriching data.

- Data Loading: Storing processed data into a data warehouse, data lake, or other storage solutions for further analysis.

Data ingestion workflows can be tailored to fit the specific needs of a business, incorporating various tools and techniques to handle data from diverse sources. By focusing on these key components, companies can ensure that their data ingestion process is robust and capable of supporting their broader data management goals.
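The four components above can be sketched as a small pipeline. This is a minimal illustration using only the Python standard library, not a production design: the `RAW_CSV` sample, the `orders` table, and the function names `collect`, `transform`, `load`, and `run_pipeline` are all assumptions made for the example, and an in-memory SQLite database stands in for a data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a real data source (file, API, queue).
RAW_CSV = """order_id,customer,amount
1001, alice ,19.99
1002,BOB,5.00
1003,carol,not_a_number
"""


def collect(raw_text):
    """Data collection: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Data transformation: standardize fields and drop rows that fail parsing."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # filter out rows whose amount is not numeric
        customer = row["customer"].strip().lower()
        cleaned.append((int(row["order_id"]), customer, amount))
    return cleaned


def load(records, conn):
    """Data loading: write the cleaned records into a storage table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(raw_text, conn):
    """Run collection, transformation, and loading, then return what was stored."""
    load(transform(collect(raw_text)), conn)
    query = "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    print(run_pipeline(RAW_CSV, sqlite3.connect(":memory:")))
```

Keeping each component in its own function means a source, a cleaning rule, or a storage target can be swapped without touching the others.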

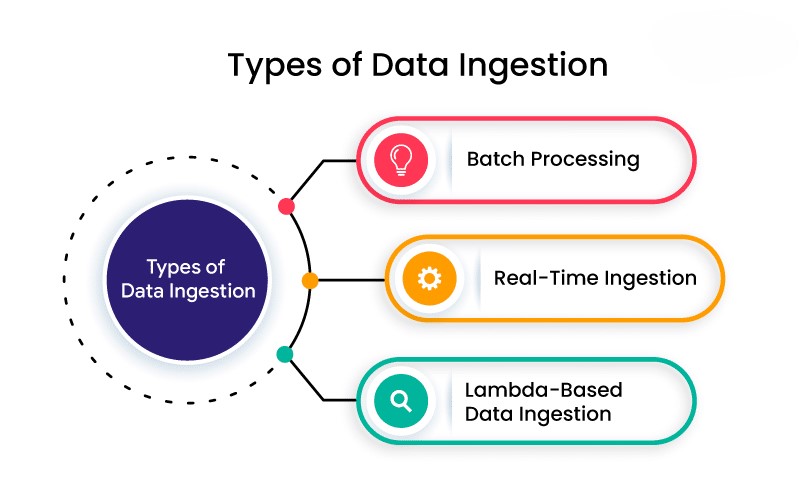

Types of Data Ingestion Processes

Data ingestion is not a one-size-fits-all solution. Different use cases require different methods, and the choice depends on the nature of the data and the specific requirements of the organization. Broadly, data ingestion processes fall into two main types: batch processing and real-time processing.

Batch processing suits workloads where immediate analysis is not required and data can be processed at scheduled intervals. Real-time processing suits workloads where timely information is critical, such as fraud detection or live monitoring of systems.

Batch Processing

Batch processing involves collecting data over a period and then processing it as a single batch. This method is suitable for scenarios where real-time data processing is not critical. Batch processing is commonly used in financial reporting, customer data analysis, and offline data processing.
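As a rough sketch of the idea, the snippet below groups accumulated records into fixed-size batches and processes each batch as a unit. The names `batches`, `process_batch`, and `run_batch_job` are hypothetical, and summing the values is only a placeholder for a real batch job such as a nightly report.

```python
from itertools import islice


def batches(records, batch_size):
    """Yield successive lists of at most batch_size records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_batch(batch):
    """Hypothetical per-batch job: here, just total the values in the batch."""
    return sum(batch)


def run_batch_job(records, batch_size):
    """Process all collected records one batch at a time."""
    return [process_batch(batch) for batch in batches(records, batch_size)]


if __name__ == "__main__":
    # Seven records in batches of three: two full batches and one partial batch.
    print(run_batch_job(range(1, 8), 3))  # prints [6, 15, 7]
```

In practice the batch boundary is often a time window (for example, one day of transactions) rather than a record count, and the job is triggered by a scheduler.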

Real-Time Processing

Real-time processing, or streaming data ingestion, involves continuously collecting and processing data as it becomes available. This approach is essential for applications that require immediate responses, such as fraud detection, monitoring IoT devices, and real-time analytics.
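By contrast with batch processing, a streaming pipeline handles each event as soon as it arrives. The sketch below is illustrative only: `event_stream` is a hypothetical in-memory generator standing in for a real streaming source, and the detection rule in `detect_anomalies` (an amount more than `factor` times the recent average for the same card) is an assumption chosen to keep the example short, not a real fraud model.

```python
from collections import deque


def event_stream():
    """Hypothetical event source; a real one would read from a streaming platform."""
    yield from [
        {"card": "A", "amount": 20.0},
        {"card": "A", "amount": 25.0},
        {"card": "A", "amount": 900.0},
        {"card": "B", "amount": 15.0},
    ]


def detect_anomalies(events, window=3, factor=5.0):
    """Yield events whose amount exceeds factor times the card's recent average."""
    recent = {}  # card -> last `window` amounts seen for that card
    for event in events:
        history = recent.setdefault(event["card"], deque(maxlen=window))
        if history and event["amount"] > factor * (sum(history) / len(history)):
            yield event  # emitted immediately, before later events are read
        history.append(event["amount"])


if __name__ == "__main__":
    for suspicious in detect_anomalies(event_stream()):
        print("flagged:", suspicious)
```

Because both functions are generators, each event is evaluated the moment it is produced, which is the property that distinguishes streaming from batch ingestion.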

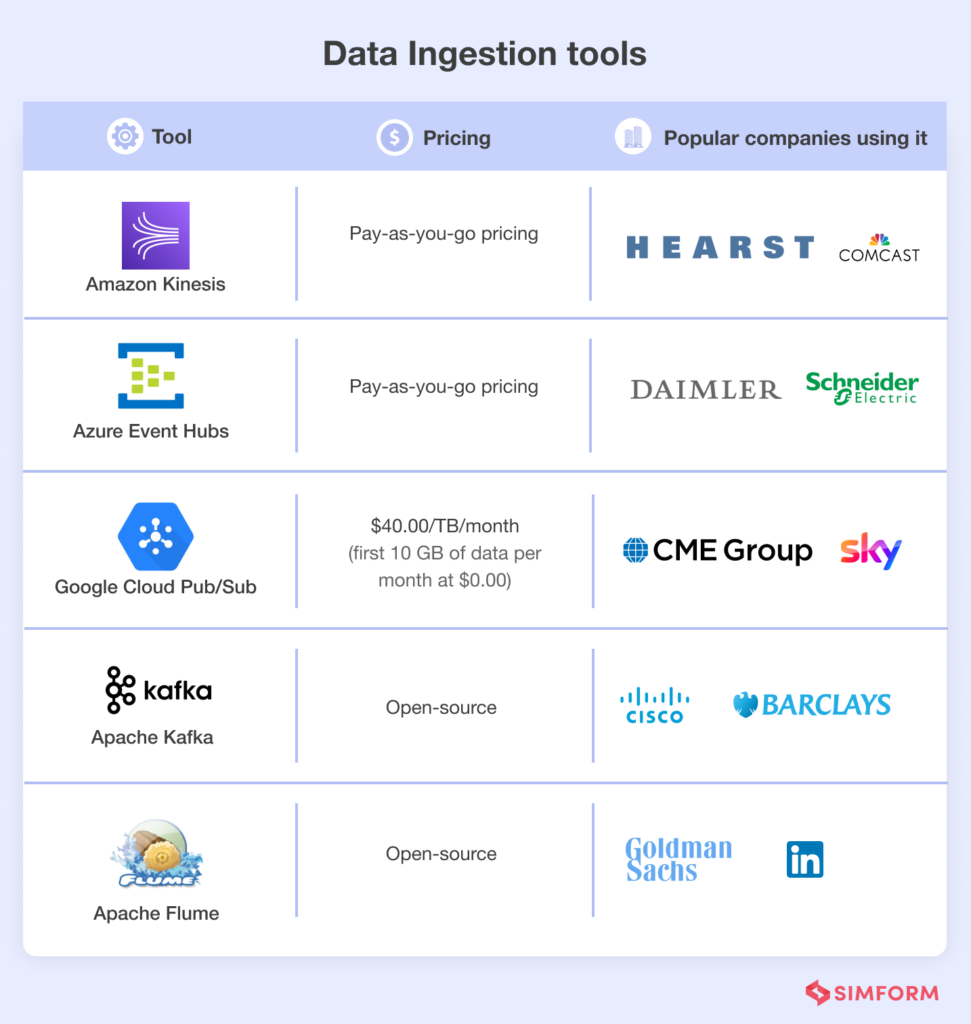

Top Data Ingestion Tools in the Market

Apache Kafka

Apache Kafka is a popular open-source data streaming platform known for its high throughput, scalability, and reliability. It is widely used for building real-time data pipelines and streaming applications.

Apache NiFi

Apache NiFi is a powerful data integration tool that provides a user-friendly interface for automating data flows between systems. It supports real-time and batch processing, making it a versatile tool for data ingestion.

AWS Glue

AWS Glue is a managed ETL (Extract, Transform, Load) service provided by Amazon Web Services. It offers a scalable and serverless architecture for data integration, making it easy to prepare and load data for analytics.

Google Cloud Dataflow

Google Cloud Dataflow is a fully managed service for stream and batch data processing. It simplifies building and executing data pipelines, providing a unified programming model (Apache Beam) for both modes.

Azure Data Factory

Azure Data Factory is a cloud-based data integration service from Microsoft. It allows users to create, schedule, and orchestrate data workflows, supporting both batch and real-time data ingestion.

How to Choose the Right Data Ingestion Tool

Choosing the right data ingestion tool is crucial for building a robust and efficient data pipeline. The choice depends on several factors, which should be weighed carefully:

- Data Volume and Velocity: The amount and speed of data generated by the organization.

- Scalability: The tool’s ability to handle growing data volumes.

- Integration Capabilities: Compatibility with existing data sources and analytics platforms.

- Ease of Use: User interface and learning curve for the team.

- Cost: Licensing, maintenance, and infrastructure costs.

Selecting the right data ingestion tool is not just about addressing immediate needs but also about anticipating future requirements. Organizations should choose tools that meet their current data management demands and have the flexibility to scale and adapt as data needs evolve. By carefully considering these factors, businesses can implement data ingestion solutions that support long-term growth and innovation.
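One simple way to compare candidates against the factors listed above is a weighted score. The sketch below is only an illustration of that technique: the weights, the 1 to 5 ratings, and the candidate names `tool_a` and `tool_b` are all hypothetical placeholders, not assessments of any real product.

```python
# Hypothetical weights for the five selection factors; they sum to 1.0.
WEIGHTS = {
    "volume_velocity": 0.30,
    "scalability": 0.25,
    "integration": 0.20,
    "ease_of_use": 0.15,
    "cost": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine per-factor ratings (1 = poor, 5 = excellent) into one score."""
    return sum(weights[factor] * ratings[factor] for factor in weights)


def rank_tools(candidates, weights=WEIGHTS):
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


if __name__ == "__main__":
    # Placeholder ratings a team might assign after evaluating each tool.
    candidates = {
        "tool_a": dict.fromkeys(WEIGHTS, 3),
        "tool_b": {
            "volume_velocity": 5,
            "scalability": 5,
            "integration": 2,
            "ease_of_use": 2,
            "cost": 1,
        },
    }
    print(rank_tools(candidates))
```

The weights should reflect the organization's own priorities; a team with tight budgets, for instance, would raise the weight on cost and the ranking could change.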

Steps Involved in the Data Ingestion Process

- Identify Data Sources: Determine the sources of data to be ingested.

- Select Ingestion Method: Choose batch processing, real-time processing, or a combination of both based on business needs.

- Data Collection: Collect data using APIs, data streams, or other methods.

- Data Transformation: Cleanse and format the data for consistency.

- Data Loading: Load the transformed data into a storage solution for analysis.

- Monitoring and Maintenance: Continuously monitor data pipelines to ensure they function correctly.
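The monitoring step is often where pipelines need the most care, because sources can fail temporarily. The sketch below shows one common pattern, retrying a collection call with exponential backoff while logging each failure. The helper name `with_retries`, its parameters, and the `flaky_fetch` source are assumptions for illustration only.

```python
import logging
import time

logger = logging.getLogger("ingestion")


def with_retries(fetch, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call fetch(); on failure, log it, wait with exponential backoff, and retry.

    The last failure is re-raised so the caller (or an alerting system) sees it.
    """
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


if __name__ == "__main__":
    logging.basicConfig(level=logging.WARNING)
    state = {"calls": 0}

    def flaky_fetch():
        """Hypothetical source that fails twice before returning data."""
        state["calls"] += 1
        if state["calls"] < 3:
            raise ConnectionError("source unavailable")
        return ["row-1", "row-2"]

    print(with_retries(flaky_fetch))
```

The `sleep` parameter is injectable so the backoff can be tested without real waiting; the warnings written to the log are the raw material for pipeline monitoring.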

Best Practices for Efficient Data Ingestion

- Automate Data Workflows: Use automation to reduce manual intervention and errors.

- Ensure Data Quality: Implement validation and cleansing to maintain high data quality.

- Scalability: Design pipelines that can scale with increasing data volumes.

- Security: Protect data with encryption and access controls.

- Documentation: Maintain clear documentation of data ingestion processes.
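To make the data quality practice concrete, the sketch below validates records before they are loaded and separates rejected records along with the reasons for rejection. The field names (`id`, `email`, `age`) and the rules themselves are hypothetical; real rules depend on the dataset.

```python
def validate(record):
    """Return a list of problems found in one record (an empty list means valid)."""
    problems = []
    if not record.get("id"):
        problems.append("missing id")
    email = record.get("email") or ""
    if "@" not in email:
        problems.append("invalid email")
    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 130:
        problems.append("age out of range")
    return problems


def split_valid(records):
    """Partition records into valid ones and (record, problems) rejections."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@example.com", "age": 34},
        {"id": 2, "email": "not-an-email", "age": 200},
    ]
    valid, rejected = split_valid(sample)
    print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejected records and their reasons, rather than silently dropping them, makes it possible to report quality problems back to the owners of the source.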

Challenges in Data Ingestion and How to Overcome Them

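Data ingestion presents several challenges, each of which can be addressed with the practices described earlier:

- Data Consistency: Data from different sources often differs in format or content. Validation and cleansing during transformation keep it consistent.

- Latency: Delays in data processing undermine real-time insights. Real-time (streaming) ingestion shortens the gap between data generation and availability.

- Scalability: Large data volumes can degrade performance. Pipelines designed to scale with increasing data volumes avoid this.

- Data Security: Sensitive data is exposed to risk during ingestion. Encryption and access controls protect it.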
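One technique that helps with the data consistency challenge is making the load step idempotent, so that re-running a failed or repeated ingestion does not create duplicate rows. The sketch below uses SQLite's `INSERT OR REPLACE` keyed on a primary key; the `customers` table and the function name `upsert_customers` are assumptions for illustration.

```python
import sqlite3


def upsert_customers(conn, records):
    """Load (id, email) records so that repeated loads leave one row per id."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, email TEXT)"
    )
    # A row with an existing id is replaced instead of inserted a second time.
    conn.executemany(
        "INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)", records
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    upsert_customers(conn, [(1, "a@example.com"), (2, "b@example.com")])
    # Re-ingesting an overlapping batch updates id 2 and adds id 3.
    upsert_customers(conn, [(2, "b.new@example.com"), (3, "c@example.com")])
    print(conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall())
```

Other storage systems offer comparable upsert or merge operations; the key design choice is a stable identifier that every source record can be matched on.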

Case Studies: Successful Data Ingestion Implementations

Case Study 1: E-commerce Platform

An e-commerce company leveraged Apache Kafka to build a real-time data ingestion system that captures customer interactions, transaction data, and inventory updates. By implementing real-time data processing, the company improved customer experience through personalized recommendations and real-time inventory management, which reduced stockouts and increased sales.

Case Study 2: Financial Services

A leading financial institution adopted AWS Glue for batch data ingestion, allowing it to consolidate data from various sources like banking transactions, customer profiles, and market data. This implementation enabled daily financial reporting, compliance monitoring, and enhanced fraud detection capabilities, ensuring data accuracy and regulatory adherence.

Case Study 3: Healthcare Provider

A healthcare organization used Google Cloud Dataflow to ingest and process patient data from electronic health records (EHR), lab results, and wearable devices. This real-time data ingestion capability allowed healthcare professionals to monitor patient health continuously, detect early signs of health deterioration, and deliver timely interventions, ultimately improving patient outcomes and reducing hospital readmissions.

Case Study 4: Logistics Company

A global logistics company implemented Azure Data Factory for batch and real-time data ingestion to monitor its supply chain operations. By integrating data from GPS devices, RFID sensors, and warehouse management systems, the company optimized route planning, reduced delivery times, and improved overall operational efficiency. This approach also allowed them to quickly identify and resolve bottlenecks in the supply chain.

The Future of Data Ingestion

The future of data ingestion will be shaped by advancements in AI, machine learning, and automation. As organizations continue to generate more data, the demand for scalable and real-time data ingestion solutions will grow. Additionally, the adoption of edge computing will drive the need for localized data ingestion capabilities.

Conclusion

Data ingestion is a foundational component of modern data management strategies. By leveraging the right tools and processes, businesses can ensure they have access to accurate and timely data to drive informed decision-making. As technology evolves, staying updated with the latest data ingestion techniques and best practices will be crucial for maintaining a competitive edge.

Frequently Asked Questions

1. What is Data Ingestion?

Data ingestion is the process of collecting, transforming, and loading data from various sources into a storage system for analysis.

2. What are the different types of data ingestion?

The two main types of data ingestion are batch processing and real-time processing.

3. What are the main components of a data ingestion pipeline?

The main components include data sources, data collection methods, data transformation, and data loading.

4. What are some popular data ingestion tools?

Popular tools include Apache Kafka, Apache NiFi, AWS Glue, Google Cloud Dataflow, and Azure Data Factory.